For a long time, cybersecurity was all about building a bigger wall. We relied on static, rule-based systems to catch threats, but let's be honest, those days are over. The old ways of fighting cybercrime just aren't cutting it anymore. Traditional security is like a security guard with a very specific, unchanging checklist. If a threat isn't on the list, it walks right in. This reactive approach is simply no match for the dynamic, sophisticated attacks we face today.

Why Old Cybersecurity Methods are Failing

Think of legacy security measures as a fortress with predictable defences. Your traditional firewall, for example, is a gatekeeper that only stops bad guys it already knows about. If a threat doesn't have a known "signature" or isn't on the predefined blacklist, it gets a free pass, even if it looks incredibly suspicious. This signature-based model worked well enough when threats were simpler and less frequent.

But the game has changed. Attackers now deploy polymorphic malware that constantly rewrites its own code to avoid being recognized. They craft phishing schemes so convincing they can fool even the most seasoned employees. These modern threats don't have a fixed signature, which makes old-school defences practically blind to them. On top of that, the sheer volume of data and alerts on any given network today is just too much for a human team to handle manually.
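To make the weakness concrete, here is a minimal sketch of signature matching reduced to its simplest form: a lookup of file hashes. The payloads and the `SIGNATURES` set are made up for illustration, and real products use richer signatures than a plain hash, but the failure mode is the same.

```python
import hashlib


def sha256_hex(payload: bytes) -> str:
    """Return the SHA-256 digest of a payload as a hex string."""
    return hashlib.sha256(payload).hexdigest()


# Hypothetical sample standing in for a file already known to be malicious.
known_malware = b"malicious-payload-v1"

# The "signature database": hashes of files previously identified as bad.
SIGNATURES = {sha256_hex(known_malware)}


def is_blocked(payload: bytes) -> bool:
    """Signature check: block only if this exact hash is already known."""
    return sha256_hex(payload) in SIGNATURES


# One extra padding byte stands in for polymorphic rewriting.
# The behaviour would be unchanged, but the fingerprint is different.
mutated_malware = known_malware + b"\x00"

print(is_blocked(known_malware))    # True: exact match with a known signature
print(is_blocked(mutated_malware))  # False: same threat, new fingerprint
```

A single changed byte is enough to slip past the lookup, which is why malware that rewrites itself on every infection is so hard for this model to catch.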

The Problem with a Fixed Playbook

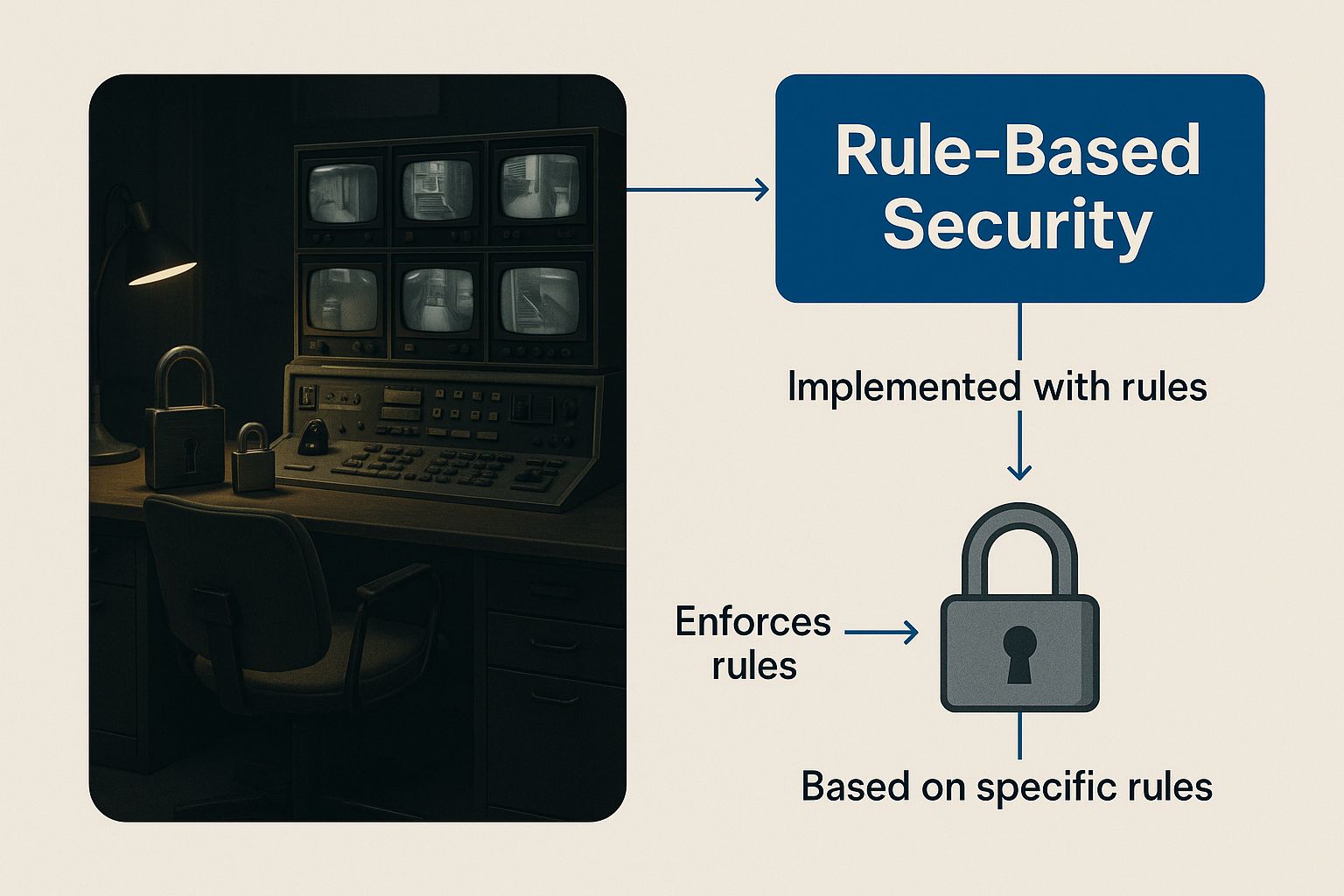

The fundamental weakness of rule-based systems is their inability to learn or understand context. They're completely helpless against "zero-day" threats: vulnerabilities that are brand new and have no patch available. An old system might successfully block a file with a known virus, but it would completely miss an attacker using legitimate system tools in an unusual way to quietly map out your entire network.

This infographic really highlights the rigid nature of these outdated security setups.

The image of a vintage control room full of padlocks is a perfect metaphor for the static, inflexible security that modern attackers can easily bypass.

This is precisely the problem that artificial intelligence for threat detection was built to solve. Instead of working off a fixed checklist, AI acts like a team of seasoned detectives who are constantly learning the unique rhythm of your organization. It gets to know what "normal" looks like: typical user behaviour, data flows, and network traffic patterns.

AI doesn't just look for known bad guys; it looks for unusual behaviour. This shift from reactive signature-matching to proactive anomaly detection is the most significant change in cybersecurity today.

The moment something deviates from that established baseline, even slightly, the AI flags it for a closer look. It can tell the difference between an employee accessing a sensitive file during work hours and the same login credentials being used to exfiltrate gigabytes of data at 3 a.m. from an unfamiliar location. To learn more about building a strong security foundation, check out our guide on software security best practices.

This adaptive intelligence is what makes AI essential for spotting the subtle, never-before-seen attacks that would breeze right past traditional defences. It transforms your security from a static wall into a dynamic, intelligent shield.

How AI Actually Learns to Find Threats

To get a real feel for artificial intelligence in threat detection, don't think of it as a single piece of software. It’s better to imagine it as a system that learns and adapts, much like a person does, only at an incredible speed. Instead of relying on a static list of known threats, AI works by developing a deep understanding of your network’s unique personality.

This learning is driven by two powerful concepts: Machine Learning (ML) and Deep Learning, a branch of ML built on multi-layered neural networks. The terms aren't interchangeable; they're the core methods we use to teach a machine how to think critically about security.

Machine Learning: The Digital Apprentice

Think of Machine Learning as training a new security analyst. You start by feeding the ML model huge amounts of data, showing it examples of both "good" (safe traffic) and "bad" (malicious activity). For instance, you could show it millions of emails, each one carefully labelled as either "legitimate" or "phishing."

Slowly but surely, the model starts to pick up on the subtle traits of each category. It learns that phishing emails often use urgent language, contain suspicious links, or come from slightly off sender addresses. After seeing enough examples, it gets remarkably good at classifying new, unseen emails all on its own.

This approach is called supervised learning, and it’s fantastic for spotting threats that follow recognizable patterns. The catch? Its performance is entirely dependent on the quality and sheer volume of the data it's trained on.
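To show what "learning from labelled examples" looks like in practice, here is a toy sketch of a naive Bayes text classifier written with only the standard library. The six training emails are invented, and naive Bayes is just one simple supervised technique chosen for illustration; a production filter would train on vastly more data with far richer features.

```python
import math
from collections import Counter

# Tiny, made-up labelled dataset (illustrative only).
TRAINING = [
    ("urgent verify your account now", "phishing"),
    ("your password expires click this link", "phishing"),
    ("urgent action required confirm payment", "phishing"),
    ("meeting notes attached for review", "legitimate"),
    ("lunch on friday with the team", "legitimate"),
    ("quarterly report draft for review", "legitimate"),
]


def train(examples):
    """Count how often each word appears under each label."""
    word_counts = {}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(text.split())
    vocab = {word for counts in word_counts.values() for word in counts}
    return word_counts, label_counts, vocab


def classify(text, model):
    """Return the label with the highest log-probability for the text."""
    word_counts, label_counts, vocab = model
    total_examples = sum(label_counts.values())
    scores = {}
    for label, counts in word_counts.items():
        score = math.log(label_counts[label] / total_examples)
        # Laplace smoothing so unseen words do not zero out the probability.
        denominator = sum(counts.values()) + len(vocab)
        for word in text.split():
            score += math.log((counts[word] + 1) / denominator)
        scores[label] = score
    return max(scores, key=scores.get)


model = train(TRAINING)
print(classify("urgent please verify your payment", model))  # phishing
print(classify("notes from the team meeting", model))        # legitimate
```

Neither test sentence appears in the training data; the model generalizes from word frequencies it picked up from the labelled examples, which is also why its quality depends so heavily on that data.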

Deep Learning: The Intuitive Expert

Deep Learning kicks things up a notch. It uses multi-layered artificial neural networks, loosely inspired by the neurons in a human brain, which lets it learn useful features from raw data without needing every little thing spelled out for it. Rather than just matching known patterns, it can uncover hidden connections and develop an intuition for what just feels "wrong."

Here’s a simple way to look at it: Machine Learning spots a phishing email because its features match known phishing attacks. Deep Learning can spot a brand-new type of attack because the attacker's behaviour, the way they move through the network, is abnormal, even if the specific method has never been seen before.

This ability makes Deep Learning incredibly effective at catching zero-day exploits and complex attacks that unfold in multiple stages. It’s all about finding the "unknown unknowns." The interplay between these concepts is central to modern cybersecurity, and you can dive deeper into how AI and machine learning are applied in security engineering in our other guides.

At the heart of cybersecurity, several key AI technologies work together to create a formidable defence. Each plays a distinct role, from learning patterns to making complex decisions on the fly.

Core AI Technologies in Threat Detection

| AI Technology | Core Function | Example Application |
|---|---|---|
| Machine Learning (ML) | Learns from historical data to identify known patterns and make predictions. | Classifying emails as “spam” or “not spam” based on millions of past examples. |
| Deep Learning (DL) | Uses multi-layered neural networks to find complex, non-obvious patterns in vast datasets. | Detecting a novel malware strain by analyzing its subtle behavioural anomalies. |
| Natural Language Processing (NLP) | Enables AI to understand, interpret, and analyze human language. | Identifying social engineering cues or malicious intent in phishing emails and messages. |
| Behavioural Analytics (UEBA) | Establishes a baseline of normal user and entity behaviour to spot deviations. | Flagging an account that suddenly starts accessing unusual files at 3 a.m. |

These technologies don't work in isolation; they form a cohesive system that provides a multi-faceted view of potential threats, making the entire security operation more intelligent and responsive.

The Power of Anomaly Detection

Ultimately, both ML and Deep Learning feed into the crucial goal of anomaly detection. The AI builds a detailed, dynamic baseline of what "normal" looks like for every single user, device, and process on your network. This baseline covers everything:

The typical work hours for each employee.

Which departments usually access certain data.

Standard network traffic patterns between servers.

The common applications people use on their devices.

Any action that strays from this established baseline gets flagged as an anomaly. A user from the marketing team is suddenly trying to access sensitive financial records? That’s an anomaly. A server that has never communicated externally is suddenly trying to upload huge files to an unknown IP address? That's a massive red flag.
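The core idea of comparing an observation against a baseline can be sketched in a few lines. This example uses a simple z-score, which is an assumption made for illustration; real platforms use much more sophisticated models, and the traffic figures and the threshold of 3 standard deviations are made up.

```python
from statistics import mean, stdev


def is_anomalous(history, observed, threshold=3.0):
    """Flag `observed` if it lies more than `threshold` standard
    deviations away from the mean of `history`."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat baseline: any change at all is a deviation.
        return observed != mu
    return abs(observed - mu) / sigma > threshold


# Hypothetical daily outbound traffic for one server, in megabytes.
baseline_mb = [120, 135, 110, 128, 140, 125, 132, 118, 122, 130]

print(is_anomalous(baseline_mb, 138))   # False: within the usual range
print(is_anomalous(baseline_mb, 5000))  # True: far outside the baseline
```

The same comparison can be run per user, per device, or per process, which is how one baseline idea scales to the whole network.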

This kind of constant, real-time comparison is simply beyond what any human team could hope to achieve. In fact, studies show that 64% of organizations already rely on AI for threat detection. By sifting through billions of data points every second, AI acts as a tireless guardian that never sleeps, catching the faint signals that would otherwise be invisible.

The Real-World Impact of AI in Cybersecurity

It’s one thing to talk about AI in theory, but its true value really clicks when you see the tangible results in day-to-day security operations. Bringing artificial intelligence for threat detection into the mix isn't just another tool in the toolbox; it fundamentally changes how businesses defend themselves. It shifts the entire posture from being reactive and manual to something proactive, intelligent, and largely automated.

The most immediate benefit? A massive drop in incident response times. In a traditional Security Operations Centre (SOC), analysts are absolutely buried under a mountain of alerts, and a huge chunk of them are false alarms. This creates a serious problem known as "alert fatigue," which slows everyone down and, worse, can cause a genuine threat to slip through the cracks.

AI acts like a sophisticated filter, cutting through all that noise with precision. Because it understands the context behind an event, it can tell the difference between a harmless hiccup and a real indicator of an attack. This frees up the human experts to focus their energy where it’s actually needed.

A SOC Before and After AI

To paint a picture, imagine a typical SOC before AI. An analyst – let's call her Sarah – clocks in and is immediately faced with a queue of over 1,000 alerts. Her day is spent digging through logs, trying to manually connect the dots between data from different systems, and attempting to build a narrative for each potential incident. It’s slow, painstaking work that leans heavily on her own experience and gut instinct.

Now, let’s look at that same SOC after integrating an AI platform. Sarah still starts her day with alerts, but now there are maybe a dozen, and they're highly qualified. The AI has already sifted through thousands of low-level events, automatically weeding out the false positives and bundling related minor alerts into a single, high-confidence incident report.

Instead of a raw data dump, Sarah gets a prioritized case file. It comes complete with a suggested attack timeline, the likely entry point, and a list of all affected systems. The AI has done the grunt work, allowing her to jump straight into validation and remediation.
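The bundling step in Sarah's story can be approximated with a simple rule: alerts on the same host that arrive close together belong to the same incident. The sketch below assumes that rule, plus hypothetical `Alert` and `Incident` types and a 10-minute window; a real platform would correlate on many more signals than host and time.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    host: str
    timestamp: int  # seconds since an arbitrary reference point
    rule: str


@dataclass
class Incident:
    host: str
    alerts: list = field(default_factory=list)


def bundle(alerts, window_s=600):
    """Group alerts on the same host when the gap between
    consecutive alerts is at most `window_s` seconds."""
    incidents = []
    latest = {}  # host -> most recent Incident opened for that host
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        current = latest.get(alert.host)
        if current is not None and alert.timestamp - current.alerts[-1].timestamp <= window_s:
            current.alerts.append(alert)
        else:
            current = Incident(host=alert.host, alerts=[alert])
            incidents.append(current)
            latest[alert.host] = current
    return incidents


alerts = [
    Alert("web-01", 0, "suspicious login"),
    Alert("web-01", 120, "new admin account"),
    Alert("db-02", 200, "port scan"),
    Alert("web-01", 300, "large outbound transfer"),
    Alert("web-01", 7200, "suspicious login"),
]

incidents = bundle(alerts)
for incident in incidents:
    print(incident.host, [a.rule for a in incident.alerts])
```

Five raw alerts collapse into three incidents, and the first one already reads like a story: a login, a new admin account, then a large transfer, all on the same machine within five minutes.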

This is the ideal partnership between human and machine. AI doesn't replace the security analyst; it elevates them. It acts as a tireless partner that handles the tedious data crunching, freeing up human experts for strategy and critical decision-making.

This powerful collaboration transforms the security workflow, turning a constant firefight into a well-managed, proactive defence.

Measurable Improvements in Efficiency and Accuracy

The case for adopting artificial intelligence for threat detection isn't just anecdotal; it's backed by some pretty compelling numbers. The improvements aren't just incremental; they represent a massive leap forward in both speed and effectiveness. AI-driven systems deliver a clear return on investment by dramatically improving key security metrics.

The data speaks for itself. For instance, AI can boost threat detection efficacy by around 60%, helping organizations catch subtle threats that older methods would almost certainly miss. The impact on speed is even more dramatic, with AI-powered systems cutting incident detection and response times from an average of 168 hours down to just seconds. These kinds of results are why 64% of organizations, especially in Canada's tech hubs, now use AI for threat detection. For a deeper look at the data, you can discover more insights into the effectiveness of AI in cybersecurity.

These aren’t just abstract statistics. They translate directly into a stronger security posture and less business risk. Just look at these key areas of improvement:

Drastically Reduced False Positives: Because AI understands context, it generates far fewer false alarms. In some cases, this can cut an analyst's workload by as much as 80 to 90%.

Faster Threat Containment: The quicker you spot a breach, the faster you can contain it. AI helps shrink containment time from an average of 322 days in legacy environments to 214 days in AI-enabled ones.

Predictive Intelligence: The best AI models can even spot precursor activities to predict potential attacks, giving teams a chance to shore up defences before an attack is even launched.

By automating the heavy lifting of detection and serving up rich, contextual insights, artificial intelligence for threat detection lets security teams work smarter, not harder. It turns a confusing flood of data into a clear stream of actionable intelligence, giving organizations a fighting chance to stay ahead of increasingly sophisticated adversaries.

Putting AI-Powered Threat Detection Into Practice

It's one thing to talk about the theory, but seeing artificial intelligence for threat detection in action is where you really grasp its power. AI isn’t some magic, one-size-fits-all box; it’s a collection of smart techniques woven into the different layers of your security setup. From watching the chatter on your network to analyzing what individual users are doing, AI brings a level of vigilance that humans just can't match on their own.

To really see what it can do, let's look at how AI works in three crucial areas: network traffic analysis, endpoint security, and user behaviour analytics. Each gives us a different angle for spotting and stopping threats before they can do real damage.

Analyzing Network Traffic for Hidden Threats

Think of your network traffic as a busy highway. Your old-school security tools are like traffic police checking licence plates against a list of known wanted cars. They’re great at catching the usual suspects, but they’re completely oblivious to a driver with a clean plate who’s weaving erratically and scoping out every building they pass.

This is exactly where AI shines. It doesn't just look for known "bad guys." It learns the normal rhythm and flow of your network's highway: what kind of traffic goes where, at what times, and how quickly.

When AI spots something out of the ordinary, like a server suddenly trying to talk to an unknown address overseas or a strange amount of data being moved at 3 a.m., it raises a red flag. This kind of heads-up monitoring is fantastic for catching things like:

Data Exfiltration: Noticing large, unusual data transfers heading out of your network that could signal a thief is at work.

Lateral Movement: Detecting an attacker trying to hop from one compromised machine to another inside your network.

Command-and-Control Communication: Picking up on the quiet, sneaky signals a compromised computer sends back to an attacker’s server.

By understanding the why behind network activity, not just the what, AI can uncover the subtle clues of an attack that older systems would completely miss.
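Command-and-control traffic is a good example of a pattern that is easy to describe but hard to spot by eye: compromised machines often check in at suspiciously regular intervals. Here is a rough sketch of one heuristic for that, measuring how evenly spaced a host's outbound connections are. The function name, thresholds, and timestamps are all illustrative assumptions, and real attackers add jitter precisely to defeat checks this simple.

```python
from statistics import mean, pstdev


def looks_like_beaconing(timestamps, max_cv=0.1, min_events=5):
    """Return True if the gaps between connections are unusually regular.

    Regularity is measured with the coefficient of variation
    (standard deviation divided by mean) of the inter-arrival times.
    """
    if len(timestamps) < min_events:
        return False
    ordered = sorted(timestamps)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    average_gap = mean(gaps)
    if average_gap == 0:
        return False
    return pstdev(gaps) / average_gap <= max_cv


# Hypothetical connection times in seconds to one external address.
beacon_like = [0, 60, 121, 180, 241, 300, 360]   # roughly every minute
human_like = [0, 5, 240, 250, 900, 1500, 1510]   # bursty and irregular

print(looks_like_beaconing(beacon_like))  # True
print(looks_like_beaconing(human_like))   # False
```

A learned model would weigh this kind of timing signal alongside destination reputation, payload size, and the host's own history rather than relying on a single threshold.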

Securing Endpoints Against Malware

Every laptop, server, and phone connected to your network is an "endpoint," and each one is a potential doorway for an attacker. Traditional antivirus software mostly relies on what’s called signature-based detection. That means it can only stop malware it already knows about, a bit like having a photo of a criminal. This leaves a huge gap for zero-day attacks, which use brand-new malware that nobody has a photo of yet.

AI-powered endpoint detection and response (EDR) tools work differently. They watch the behaviour of programs running on a device. Instead of asking, "Does this file look like a virus I've seen before?" AI asks, "Is this program acting like a virus?"

For instance, an AI system might see a document that seems harmless at first. But the moment it’s opened, it tries to encrypt your files, shut down your security tools, and call home to a strange server. The malware’s code might be new, but its hostile actions are a dead giveaway. This behavioural analysis is absolutely critical for stopping modern ransomware before it can lock you out of your own systems.
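The shift from "what does this file look like?" to "what is this program doing?" can be sketched as a scoring exercise. The behaviour names, point values, and threshold below are entirely hypothetical; an actual EDR product would learn or tune these from telemetry rather than hard-code them.

```python
# Hypothetical risk points per observed behaviour (out of 100).
BEHAVIOUR_POINTS = {
    "mass_file_encryption": 50,
    "deletes_backups": 40,
    "disables_security_tool": 30,
    "contacts_unknown_server": 20,
    "reads_document": 0,
}


def risk_score(observed_behaviours):
    """Add up the points for each distinct behaviour, capped at 100."""
    total = sum(BEHAVIOUR_POINTS.get(b, 0) for b in set(observed_behaviours))
    return min(total, 100)


def verdict(observed_behaviours, threshold=70):
    """Block the process once its combined behaviour crosses the threshold."""
    return "block" if risk_score(observed_behaviours) >= threshold else "allow"


ordinary_document = ["reads_document"]
booby_trapped_document = [
    "reads_document",
    "mass_file_encryption",
    "disables_security_tool",
    "contacts_unknown_server",
]

print(verdict(ordinary_document))       # allow
print(verdict(booby_trapped_document))  # block
```

Notice that nothing here depends on the file's contents or hash. Brand-new code that behaves this way earns the same verdict as a well-known strain.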

Understanding User Behaviour to Spot Compromise

One of the sneakiest ways for an attacker to get in is to simply steal someone's password. Once they're logged in as a legitimate employee, they can wander around the network without setting off most traditional alarms. This is where User and Entity Behaviour Analytics (UEBA) is a complete game-changer.

UEBA platforms use AI to build a profile of what's normal for every single user and device. This baseline includes things like:

What time of day and from where do they usually log in?

Which files and applications do they access all the time?

How much data do they typically work with?

If a user account suddenly strays from that pattern, the AI flags it immediately. Picture a developer in Toronto who works 9-to-5 suddenly logging in from a different continent at midnight and poking around in the finance department's folders.

This is the power of context. The AI doesn’t just see a valid login; it sees a valid login happening under highly suspicious circumstances. It can tell the difference between a dedicated employee working late and an attacker using their stolen credentials.
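Here is a simplified sketch of how that context could be checked. The `UserProfile` and `LoginEvent` types, the field names, and the "two or more deviations" rule are assumptions made for the example; a real UEBA platform would build the profile automatically and score deviations statistically rather than with fixed rules.

```python
from dataclasses import dataclass


@dataclass
class UserProfile:
    usual_countries: set
    usual_hours: range   # local hours when the user normally logs in
    usual_shares: set    # file shares the user normally touches


@dataclass
class LoginEvent:
    country: str
    hour: int
    share_accessed: str


def deviations(profile, event):
    """List the ways an event departs from the user's baseline."""
    reasons = []
    if event.country not in profile.usual_countries:
        reasons.append("unfamiliar location")
    if event.hour not in profile.usual_hours:
        reasons.append("unusual hour")
    if event.share_accessed not in profile.usual_shares:
        reasons.append("unusual resource")
    return reasons


def is_suspicious(profile, event, min_deviations=2):
    """Flag the login only when several things are off at once."""
    return len(deviations(profile, event)) >= min_deviations


# A Toronto developer who logs in between 9:00 and 16:59 local time.
developer = UserProfile({"CA"}, range(9, 17), {"source-code", "build-artifacts"})

working_late = LoginEvent("CA", 22, "source-code")
stolen_credentials = LoginEvent("RO", 0, "finance")

print(deviations(developer, working_late))         # ['unusual hour']
print(is_suspicious(developer, working_late))      # False
print(is_suspicious(developer, stolen_credentials))  # True
```

Requiring more than one deviation is what separates the dedicated employee working late from the attacker: a single oddity is tolerated, a cluster of them is not.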

For a real-world look at AI in action, consider how California is centralizing cybersecurity for its public sector. The state’s Security Operations Center as a Service (SOCaaS) uses AI to provide 24/7 monitoring for a huge number of public agencies. Since it was created, its AI-driven detection has grown from covering 110 adversarial techniques to over 1,000, tackling the vast majority of common attack methods. This is a massive, coordinated defence that shows just how effectively AI can protect complex environments. You can learn more about how California's centralized model works.

How to Bring AI-Powered Security into Your Organization

Bringing artificial intelligence for threat detection into your security operations is a major move, but it doesn't have to be a painful one. Think of it less like flipping a switch and more like a carefully planned project. The real key to getting it right is laying the groundwork first, making sure your data is in good shape, and picking tools that actually play nice with the security systems you already have.

If you break the process down into manageable chunks, the whole thing becomes a lot less intimidating. It all starts with figuring out what you really need and ends with a system that's actively making your organization safer. Following a clear path helps you sidestep the usual headaches and ensures you’re making a smart investment.

First, Take a Hard Look at Your Current Security Setup

Before you even start window shopping for new tech, you need an honest assessment of where you stand right now. Get a crystal-clear picture of your biggest weaknesses and what’s eating up your security team's time. Are they constantly chasing ghosts from a noisy SIEM? Or are they swamped with a never-ending stream of endpoint alerts?

This initial reality check is what shapes your goals. When you know your specific pain points, you can zero in on AI solutions that solve the problems you actually have, instead of just grabbing the shiniest new toy off the shelf.

Pinpoint Your Biggest Risks: Figure out which of your assets are mission-critical and what kinds of threats keep you up at night.

Gauge Your Team's Bandwidth: Be realistic about your team's current workload and their skills. This will tell you whether you need a hands-off, fully managed solution or a tool your existing team can run.

Map Out Your Data: An AI system is useless without good data. Make a list of all your data sources, including firewall logs, endpoint data, and cloud service events, and get a sense of their quality.

Choosing the Right Tools and Partners

Once you've got your needs mapped out, it's time to find the right technology partner. The market for AI security tools is noisy, and frankly, a lot of them look the same on the surface. You have to dig deeper than the marketing buzz to find a solution that fits your specific needs, your team, and your budget.

A classic mistake is picking a tool that’s way too complicated for the team to actually use, or one that just won’t connect with the systems you already rely on. The whole point is to find something that makes security operations simpler, not adds another layer of complexity.

This is where you need to run a pilot or a proof-of-concept (POC). There's no substitute for testing a solution in your own environment, with your own data. A successful POC will show you a tangible drop in false positives, faster detection speeds, and give your team insights they can act on right away. This is also where true expertise in data science and AI development can make or break the project, as it ensures the AI models are actually tuned to the threats you face.
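If you want to put numbers on a POC, a few standard metrics go a long way. The sketch below assumes you have reviewed a sample of events and recorded, for each one, whether the tool flagged it and whether it was actually malicious. The counts are invented for illustration.

```python
def poc_metrics(results):
    """Compute basic detection metrics from reviewed POC results.

    Each result is a pair: (flagged_by_tool, actually_malicious).
    """
    tp = sum(1 for flagged, bad in results if flagged and bad)
    fp = sum(1 for flagged, bad in results if flagged and not bad)
    fn = sum(1 for flagged, bad in results if not flagged and bad)
    tn = sum(1 for flagged, bad in results if not flagged and not bad)
    return {
        # Of everything the tool flagged, how much was real?
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Of everything that was real, how much did the tool catch?
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        # Of everything benign, how much was flagged anyway?
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
    }


# Hypothetical review: 8 true positives, 2 false positives,
# 2 missed threats, and 88 benign events correctly ignored.
results = (
    [(True, True)] * 8
    + [(True, False)] * 2
    + [(False, True)] * 2
    + [(False, False)] * 88
)

metrics = poc_metrics(results)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Running the same calculation on your existing tooling gives you a like-for-like comparison, which is far more persuasive than a vendor's benchmark.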

For many organizations, the big question is whether to build a custom solution or buy a ready-made one. Each path has its own trade-offs, from cost and control to how fast you can get up and running.

Comparing AI Threat Detection Implementation Models

Here’s a quick breakdown to help you weigh the options between building from scratch and buying a commercial solution.

| Consideration | In-House Build | Commercial Solution (Buy) |
|---|---|---|
| Initial Cost | Very high (salaries, infrastructure, R&D) | Lower upfront (licensing/subscription fees) |
| Time to Value | Long (months to years for development) | Fast (weeks or even days to deploy) |
| Customization | Complete control; tailored to your exact needs | Limited to the vendor’s features and roadmap |
| Maintenance | Your team is responsible for all updates & tuning | Vendor handles all maintenance and updates |
| Required Expertise | Needs a dedicated team of data scientists & engineers | Can be managed by existing security analysts |
| Scalability | You are responsible for building for scale | Built to scale by the vendor |

Ultimately, the "build vs. buy" decision comes down to your organization's resources, timeline, and how unique your security needs really are.

Going Live: Deployment and Integration

After a successful pilot, you're ready for the full rollout. This means deploying the solution across the entire organization and weaving it into your daily security workflows. That integration piece is absolutely crucial. The AI tool needs to feed its findings directly into your SIEM or SOAR platform so you can automate responses and make your team's life easier.
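What "feeding findings into your SIEM" looks like depends entirely on the platform, but many ingestion pipelines accept structured events such as one JSON object per line. The sketch below assumes that format; the function name and every field name are hypothetical, so check your own platform's schema before borrowing any of it.

```python
import json
from datetime import datetime, timezone


def to_siem_event(host, finding, severity, detected_at=None):
    """Serialize a detection as a single JSON line.

    The field names are placeholders, not any vendor's schema.
    """
    detected_at = detected_at or datetime.now(timezone.utc)
    event = {
        "timestamp": detected_at.isoformat(),
        "host": host,
        "finding": finding,
        "severity": severity,
        "source": "ai-detector",
    }
    return json.dumps(event, sort_keys=True)


line = to_siem_event(
    "web-01",
    "possible data exfiltration",
    "high",
    detected_at=datetime(2024, 1, 15, 3, 0, tzinfo=timezone.utc),
)
print(line)
```

Keeping the output structured and timestamped in UTC is what lets a SOAR playbook act on the finding automatically instead of waiting for someone to read it.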

Finally, don't forget that this isn't a "set it and forget it" kind of deal. You'll need to provide ongoing training so your team can get the most out of the new system. You also have to keep an eye on the AI's performance, tweaking the models as your network changes and new threats pop up. This ensures your artificial intelligence for threat detection stays sharp and effective for the long haul.

The Future of AI in Cyber Defence

Where is all this heading? Well, the role of AI in threat detection is about to get a lot bigger. We're moving away from AI as a simple reactive alarm system and toward something more like a proactive, predictive partner in our cyber defence.

The next wave isn't just about spotting unusual activity. It's about orchestrating automated responses and hunting for threats before they even get a chance to launch. This isn't just a nice-to-have upgrade; it's a flat-out necessity.

Cybercriminals are already using AI to craft smarter phishing attacks, build malware that’s harder to catch, and automate their own dirty work on a massive scale. This reality forces our hand, creating an urgent need for defensive AI that can learn, adapt, and outpace the opposition. The future of security is an AI arms race, and the only way to stay ahead is to keep innovating.

Emerging Trends in AI Security

The next generation of AI-powered security is all about greater autonomy and predictive muscle. We’re heading toward systems that don't just send an alert to a human analyst. Instead, they’ll take direct action to shut down a threat in real-time, closing the window of opportunity for attackers before they can do any real damage.

Here are a few key developments to watch:

Hyperautomation in Incident Response: AI will start managing the entire incident process. From the first flicker of detection and analysis all the way to containment and cleanup, AI will handle it, leaving human teams free to focus on bigger-picture strategy.

Proactive AI Threat Hunters: Picture AI agents that constantly patrol your network, thinking like an attacker. They’ll simulate attack paths and pinpoint weak spots long before a real threat actor finds them.

Generative AI for Defence: We'll see AI used to create incredibly realistic "honeypots" and other deception tactics. These will be designed to trap attackers, letting us study their methods in a safe, controlled sandbox.

The real game-changer here is the shift from detection to prediction. The ultimate goal is for AI to anticipate an attacker's next move and cut them off at the pass.

A huge part of this future involves advanced strategies like implementing Zero Trust security, which leans heavily on AI-driven insights for continuous verification. The core principle of "never trust, always verify" is a perfect job for an AI to enforce.

The investment in this future is already happening. By 2028, it’s projected that multi-agent AI systems will handle 70% of AI applications in threat detection. That’s a staggering jump from just 5% in 2023. This trend signals a clear and decisive move toward fortifying our digital walls with intelligent, automated defences.

Common Questions About AI in Threat Detection

Thinking about bringing AI into your security strategy can spark a lot of questions. It's a big step, after all. Let's walk through some of the most common ones I hear from business leaders and IT teams to clear things up.

Will AI Make My Cybersecurity Team Obsolete?

This is probably the biggest myth out there, and the short answer is a firm no. AI isn't here to replace your people; it's here to supercharge them. It acts as a force multiplier, taking on the monotonous, high-volume task of combing through mountains of data. This frees up your skilled analysts to do what they do best: high-level threat hunting, deep-dive investigations, and making those crucial, context-aware decisions.

Think of it like this: AI is the diligent scout that can scan the entire battlefield and flag every potential threat in seconds. Your human team are the seasoned commanders who interpret that intelligence, understand the bigger picture, and decide on the winning strategy. It's a partnership where AI provides the scale and speed, and humans provide the irreplaceable critical thinking.

How Much Data Does an AI Model Actually Need to Work?

The old saying "garbage in, garbage out" has never been truer than with AI. To be truly effective, an AI model needs to learn the unique rhythm of your specific environment. That requires a healthy diet of high-quality data from across your entire network.

We're talking about logs and telemetry from sources like:

Network gear: Firewalls, routers, and switches

Endpoints: Laptops, servers, and mobile devices

Cloud infrastructure: Applications and services

Identity systems: Your access and authentication logs

There isn't a single magic number for volume, but a good rule of thumb is to have at least several weeks, ideally a few months, of historical data. This gives the AI a rich, detailed baseline of what "normal" looks like for you, which is the absolute foundation for spotting anything that's out of place.

I'm a Small Business. What’s My Best First Step?

Jumping headfirst into building a custom, in-house AI security platform is a massive undertaking, especially for smaller businesses. A much more practical and effective starting point is to choose a security solution that already has powerful AI capabilities baked right in.

Modern Endpoint Detection and Response (EDR) platforms or Managed Detection and Response (MDR) services are fantastic options.

These tools give you all the advantages of AI-driven threat detection without the headache and expense of building and maintaining the complex infrastructure yourself. It’s a cost-effective way to immediately level up your defences.

How Can AI Possibly Stop a Brand-New, Zero-Day Attack?

This is precisely where AI truly flexes its muscles. Traditional antivirus and security tools rely on signatures, which are digital fingerprints of known threats. They are completely helpless against a zero-day attack because, by definition, its signature doesn't exist yet.

But artificial intelligence for threat detection isn't looking for a known fingerprint; it's watching for suspicious behaviour. It understands what normal processes look like and can immediately spot when something is acting maliciously, even if it has never seen that specific piece of malware before.

For instance, a new strain of ransomware might have unique code, but its actions, like rapidly encrypting files, attempting to delete backups, and trying to spread to other machines, are a dead giveaway. The AI sees this dangerous deviation from normal behaviour and can instantly trigger a response, like isolating the infected computer to stop the attack in its tracks. It's this focus on behaviour that makes it your best defence against the unknown.
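One of those giveaways, rapid file modification, is simple enough to sketch. The example below counts a process's file writes inside a sliding time window and trips once the rate passes a limit. The class name, the limit of 50 writes in 10 seconds, and the simulated timings are all assumptions for illustration; a real tool would combine this with other signals before isolating a machine.

```python
from collections import deque


class WriteRateMonitor:
    """Flag a process that modifies too many files in a short window."""

    def __init__(self, max_writes=50, window_s=10.0):
        self.max_writes = max_writes
        self.window_s = window_s
        self.events = deque()

    def record_write(self, timestamp):
        """Record one file write; return True if the rate limit is exceeded."""
        self.events.append(timestamp)
        # Discard writes that have aged out of the sliding window.
        while self.events and timestamp - self.events[0] > self.window_s:
            self.events.popleft()
        return len(self.events) > self.max_writes


# A normal application: one file saved every 5 seconds.
normal_monitor = WriteRateMonitor()
normal_flags = [normal_monitor.record_write(i * 5.0) for i in range(20)]

# Ransomware-like burst: 20 files rewritten per second.
burst_monitor = WriteRateMonitor()
burst_flags = [burst_monitor.record_write(i * 0.05) for i in range(100)]

print(any(normal_flags))        # False
print(any(burst_flags))         # True
print(burst_flags.index(True))  # 50: tripped on the 51st write
```

In this simulation the burst is caught about two and a half seconds in, which is the whole point of behavioural detection: the response starts while most of the files are still intact.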

Ready to see how AI-powered solutions can strengthen your security posture? Cleffex Digital Ltd builds custom software with intelligent security features designed for today's threat environment. Visit us to learn more.