Machine learning flips the script on cyber risk, moving security from a reactive, after-the-fact cleanup crew to a proactive defence. Instead of just blocking threats we already know about, it trains algorithms to understand what's normal for your network, so they can spot and predict malicious activity before it has a chance to do damage. It's an adaptive defence that's essential for keeping up with today's ever-changing cyber threats.

Why Traditional Cybersecurity is Falling Behind

Think of old-school cybersecurity like a bouncer with a very specific checklist of troublemakers. This approach, known as signature-based detection, is great at stopping threats that have been seen and catalogued before. The problem is that its biggest strength is also its greatest weakness: it's blind to anything new.

In a world where cyberattacks are constantly evolving, that reactive stance is a massive liability. Attackers are always cooking up new malware and finding clever ways to sneak past defences, creating threats that have no signature. By the time a traditional system finally recognizes what’s happening, the damage is often already done.

The Shift from Reactive to Predictive Defence

This is where machine learning for cyber risk prevention changes the game. Forget the bouncer with a list; now imagine a savvy detective who’s learned to spot criminal patterns and can predict their next move. This detective isn't just looking for familiar faces. They're analyzing behaviours, flagging anomalies, and zeroing in on any activity that deviates from the established baseline.

That predictive power is precisely why integrating machine learning into your security framework is so valuable. The goal is no longer just to block known attacks but to get ahead of them, to anticipate and neutralize threats before they can even launch. In this guide, we'll walk you through exactly how this modern approach to digital defence works.

We’re going to cover:

Core Concepts: Getting to grips with the fundamental ideas behind predictive security.

Model Types: A look at the specific algorithms used for spotting threats.

Implementation Challenges: Understanding the real-world hurdles and how to clear them.

Industry Use Cases: Seeing how companies are putting these tools to work right now.

By moving from a defensive stance to a predictive one, organizations can dramatically shrink their attack surface and stay ahead of both known and unknown threats. Machine learning sifts through mountains of data to find the faint signals of an attack that a human analyst could easily miss.

This journey will give you a solid grasp of not just the 'what' but also the 'how' of using machine learning for security. You'll see how it transforms a simple barrier into an intelligent, adaptive system that’s ready for the sophisticated threats of tomorrow.

Understanding How Predictive Security Works

Let's cut through the noise and talk about what predictive security really means. At its heart, it's about teaching a system to think, learn, and anticipate threats, much like a digital detective on the lookout for trouble. Instead of just working off a static list of known criminals, it learns to recognise suspicious behaviour on its own.

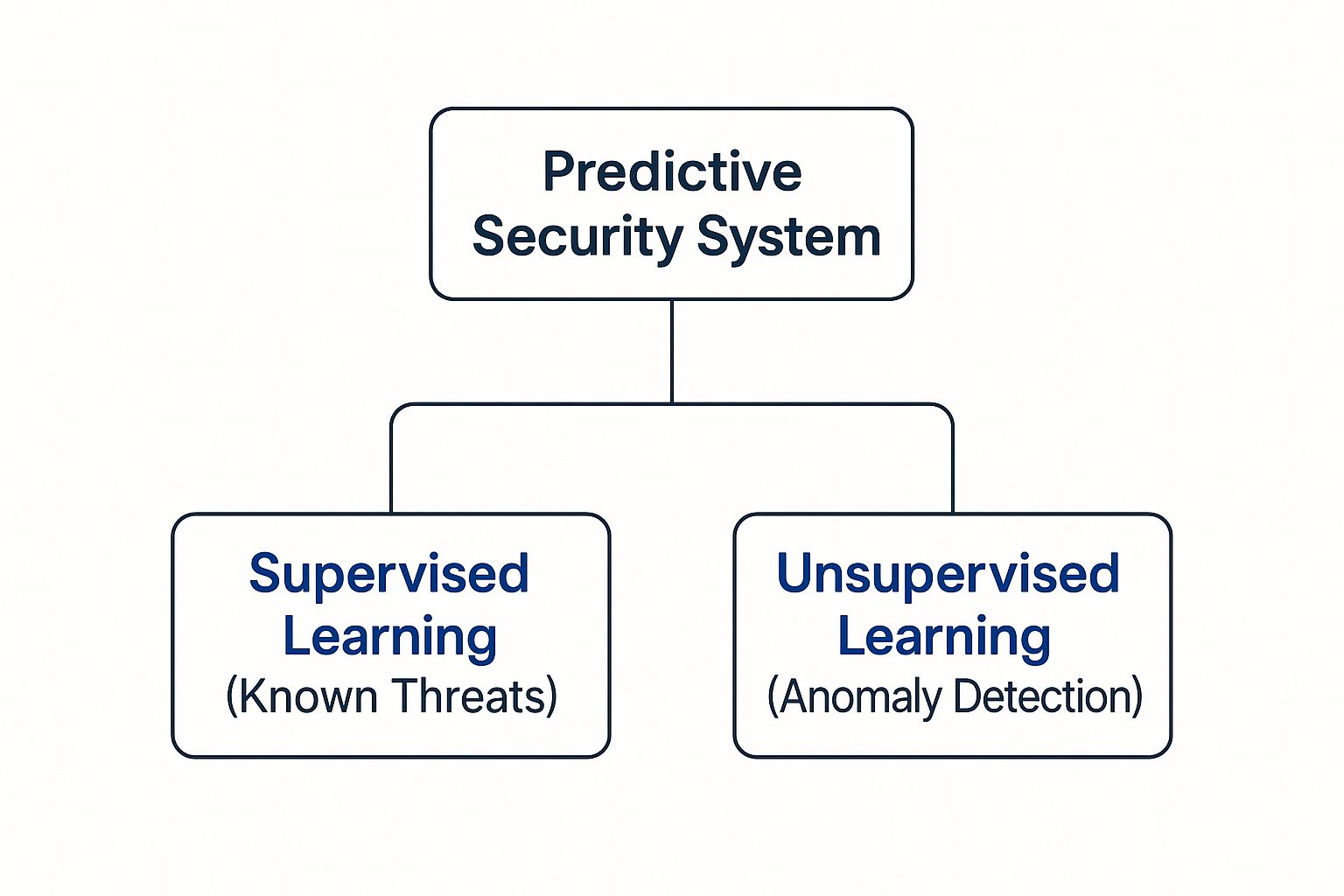

This learning process unfolds in two main ways: training the system with known examples and teaching it how to spot anything that looks out of the ordinary. When you combine both methods, you get a security system that can defend against threats it has never even seen before. This is what gives machine learning its predictive power, shifting security from a reactive chore to a proactive shield.

Supervised Learning: Teaching by Example

The first method is called supervised learning. Think of it like training a security dog. You show the dog scents from known dangerous materials (this is your "labelled data") and give it a treat when it correctly identifies them. Over time, that dog gets incredibly good at sniffing out those specific threats.

In cybersecurity, a supervised learning model is fed massive datasets of known threats. It might chew through millions of files, with each one clearly labelled as either "malicious malware" or "benign software." The algorithm studies the features of each file, such as code structure, file size, and permissions requested, and learns to tell the good from the bad with high accuracy. This makes it particularly effective at catching new variations of existing threats.
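
To make the idea concrete, here is a minimal sketch of learning from labelled examples. It uses a nearest-centroid classifier, which is only one simple stand-in for the many supervised algorithms a real product might use. The three features and all sample values are hypothetical, chosen purely for illustration.

```python
from math import dist
from statistics import mean

# Hypothetical labelled samples:
# (permissions requested, byte entropy 0-8, suspicious API calls)
LABELLED = [
    ((2, 4.1, 0), "benign"),
    ((3, 4.8, 1), "benign"),
    ((1, 3.9, 0), "benign"),
    ((9, 7.6, 6), "malicious"),
    ((8, 7.2, 5), "malicious"),
    ((10, 7.9, 7), "malicious"),
]

def train(samples):
    """Return one centroid (mean feature vector) per label."""
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }

def predict(centroids, features):
    """Assign the label whose centroid is closest to the new sample."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

centroids = train(LABELLED)
# A file the model has never seen, resembling the known malicious samples.
prediction_new_variant = predict(centroids, (7, 7.0, 4))
prediction_clean_file = predict(centroids, (2, 4.5, 1))
```

The unseen sample is classified by its resemblance to the labelled examples, which is why this style of model tends to do well on variations of threats it was trained on.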

Unsupervised Learning: Finding the Unknown

The second method, unsupervised learning, flips the script entirely. Imagine a security guard who has been monitoring the same building for months. They don't have a list of wanted suspects; instead, they have a deeply ingrained sense of what "normal" daily activity looks like: when people arrive, which doors they use, and where they typically go.

Then one day, someone tries to access a secure server room at 3 a.m. using a side entrance. The guard immediately spots this as an anomaly, a major break from the established pattern, and flags it as a potential threat. Unsupervised learning models do the same thing by analysing network traffic, user logins, and data access to build a baseline of normal behaviour. When an action strays too far from that baseline, the system sounds the alarm.

This ability to detect anomalies without any prior knowledge of a specific threat is the key to identifying zero-day attacks: brand-new exploits that have no known signature. It allows security systems to spot the behaviour of an attack, not just its fingerprint.
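
A baseline-and-deviation check can be sketched in a few lines. The example below assumes a single numeric signal (daily outbound data volume) and a simple z-score test. The sample values and the threshold of 3 standard deviations are illustrative assumptions, and real systems typically track many signals at once.

```python
from statistics import mean, stdev

def build_baseline(history):
    """Summarise 'normal' as the mean and standard deviation of past values."""
    return mean(history), stdev(history)

def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value that sits more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical daily outbound data volumes in MB, with no labels attached.
history = [120, 135, 110, 128, 140, 125, 118, 132]
baseline = build_baseline(history)

typical_day = is_anomalous(130, baseline)
exfiltration_like_day = is_anomalous(900, baseline)
```

Nothing in this check knows what an attack looks like. It only knows what the past looked like, which is the property that lets it react to behaviour it has never seen.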

This infographic neatly shows how these two pillars support a modern predictive security system.

As you can see, supervised learning handles the knowns, while unsupervised learning tackles the unknowns by detecting anomalies. Together, they create a much more complete defence.

From Signatures to Behaviours: A Practical Look

So how does this actually look in practice? The classic example is your email spam filter, which cleverly uses both approaches:

Initial Training (Supervised): The filter is first trained on millions of emails already marked as "spam" or "not spam." It learns to spot common spam characteristics, like suspicious links, urgent subject lines, or weird formatting.

Adaptive Learning (Unsupervised): As you go about your day, the filter watches your personal habits. It learns who you talk to regularly and what kind of content you usually receive. If an email suddenly shows up from a strange sender at an odd time with an unusual attachment, it flags it as an anomaly, even if it doesn't match a known spam signature.

This move away from simply matching known "bad" signatures to truly understanding malicious behaviours is the fundamental shift that machine learning brings to the table. We’re no longer just building a higher wall; we're creating an intelligent guard that can outthink attackers.

To see how this all comes together, it’s worth comparing the old way with the new.

Comparing Traditional vs Machine Learning Cybersecurity

| Aspect | Traditional Cybersecurity | Machine Learning Cybersecurity |
|---|---|---|
| Detection Method | Signature-based; relies on a database of known threats. | Behaviour-based; identifies anomalies and patterns. |
| Response to New Threats | Reactive; cannot detect threats until a signature is created. | Proactive; can identify zero-day attacks and novel threats. |
| Human Involvement | High; requires constant manual updates and rule creation. | Low; models learn and adapt automatically from new data. |
| Scalability | Limited; performance can degrade as the threat database grows. | Highly scalable; can process vast amounts of data in real time. |
| False Positives | Can be high with poorly defined rules. | Lower over time as the model learns what is “normal.” |

Ultimately, the goal is to build a security posture that is both resilient and predictive. Services like managed endpoint detection and response (MDR) are prime examples of how these advanced, AI-driven techniques are put into action to proactively hunt down and neutralise threats before they can cause damage.

A Look Inside the Toolbox: Key Machine Learning Models for Threat Detection

Now that we've covered the basics of supervised and unsupervised learning, we can pop the hood and look at the engines driving modern threat detection. These machine learning models are the specific algorithms that do the heavy lifting: sifting through mountains of data, finding the patterns, and flagging trouble.

Think of these models as different specialists on a security team. You wouldn’t ask a network architect to analyse malware, and you wouldn't assign a forensics expert to manage firewall rules. In the same way, picking the right algorithm is a huge part of making machine learning for cyber risk prevention work effectively.

Let's dive into some of the most powerful and widely used models in the field.

Decision Trees and Random Forests

Imagine a simple flowchart that asks a series of yes-or-no questions to decide if an email is a phishing attempt. That's the basic idea behind a Decision Tree.

For example, the model might ask:

Does the email create a sense of urgency? (Yes/No)

Is the sender's domain one we've never seen before? (Yes/No)

Are there links where the displayed text doesn't match the actual URL? (Yes/No)

By following the branches of these questions, the tree ends up classifying the email as either "legitimate" or "phishing." While a single decision tree is straightforward and easy to interpret, it can sometimes be a bit too rigid and prone to errors.
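
The flowchart above can be written down directly as nested yes/no checks. Note that this sketch is hand-written for illustration: a trained decision tree would learn the order of the questions and the split points from labelled data. The `Email` fields and the branch order are assumptions.

```python
from dataclasses import dataclass

@dataclass
class Email:
    creates_urgency: bool
    sender_domain_is_new: bool
    link_text_mismatches_url: bool

def classify(email: Email) -> str:
    """Walk a fixed tree of yes/no questions down to a leaf label."""
    if email.link_text_mismatches_url:
        # Mismatched links are treated as the strongest signal in this sketch.
        return "phishing"
    if email.sender_domain_is_new:
        # An unfamiliar sender only counts against the email if it also pushes urgency.
        return "phishing" if email.creates_urgency else "legitimate"
    return "legitimate"

urgent_stranger = classify(Email(True, True, False))
urgent_colleague = classify(Email(True, False, False))
```

Because every decision is an explicit branch, an analyst can trace exactly why an email was flagged, which is the interpretability advantage mentioned above.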

That's where the Random Forest comes in. Instead of trusting just one flowchart, a Random Forest builds an entire committee of them, often hundreds or thousands of individual decision trees. Each tree gets a slightly different slice of the data and casts its "vote" on the final outcome. This "wisdom of the crowd" approach makes the model far more accurate and robust, smoothing out the biases of any single tree. It's a real powerhouse for tasks like classifying malware families or identifying malicious network requests.
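
The voting step can be sketched as follows. This shows only the aggregation: a real Random Forest would also train each tree on a random sample of the data and features, whereas the three "trees" here are hand-written stand-ins with hypothetical feature names.

```python
from collections import Counter

# Each stand-in "tree" looks at a different subset of the features.
def tree_links(sample):
    return "malicious" if sample["mismatched_links"] > 0 else "benign"

def tree_sender(sample):
    return "malicious" if sample["new_domain"] and sample["urgent"] else "benign"

def tree_attachment(sample):
    return "malicious" if sample["attachment_ext"] in {".exe", ".js", ".scr"} else "benign"

FOREST = [tree_links, tree_sender, tree_attachment]

def forest_predict(sample, trees=FOREST):
    """Return the majority label and the share of trees that voted for it."""
    votes = Counter(tree(sample) for tree in trees)
    label, count = votes.most_common(1)[0]
    return label, count / len(trees)

sample = {
    "mismatched_links": 1,
    "new_domain": True,
    "urgent": False,
    "attachment_ext": ".exe",
}
label, vote_share = forest_predict(sample)
```

Here one tree disagrees with the other two, and the majority still carries the decision. The vote share also doubles as a rough confidence figure.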

Clustering Algorithms for Anomaly Detection

Random Forests are fantastic at classifying known threats, but what about the things you’ve never seen before? This is where unsupervised models, especially clustering algorithms, really get a chance to shine. The most common one you'll hear about is K-Means Clustering.

Picture a security analyst trying to get a handle on normal user behaviour. The K-Means algorithm helps by automatically grouping similar activities into clusters without needing any pre-existing labels. It might create a cluster for "typical weekday office activity," another for "remote weekend access," and a third for "automated system processes."

Now, let's say a user account suddenly starts doing things that don't fit neatly into any of those established groups, like accessing sensitive files at 3 a.m. and trying to export a massive amount of data.

Because this behaviour is a major outlier from any known cluster, the algorithm flags it as an anomaly. This is how unsupervised learning becomes your first line of defence against zero-day threats and insider risks, by spotting any strange deviation from the norm.

This exact technique is the foundation of many User and Entity Behavior Analytics (UEBA) systems, which are crucial for catching compromised accounts before serious damage is done.
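
The clustering idea can be sketched with a small, dependency-free K-Means. The session features, starting centroids, and the `max_distance` cut-off are all illustrative assumptions, and a production system would normally scale the features and rely on a tested library implementation.

```python
from math import dist
from statistics import mean

def kmeans(points, centroids, iterations=10):
    """Lloyd's algorithm: assign each point to its nearest centroid, then recompute the means."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        centroids = [
            tuple(mean(axis) for axis in zip(*cluster)) if cluster else centroid
            for cluster, centroid in zip(clusters, centroids)
        ]
    return centroids

def is_outlier(point, centroids, max_distance):
    """A point far from every cluster centre does not fit any known behaviour group."""
    return min(dist(point, c) for c in centroids) > max_distance

# Hypothetical features per session: (hour of day, MB transferred)
sessions = [
    (9, 20), (10, 25), (11, 22), (14, 30), (15, 28),  # weekday office activity
    (20, 5), (21, 8), (22, 6),                        # light evening remote access
]
centroids = kmeans(sessions, centroids=[sessions[0], sessions[5]])

normal_session = is_outlier((10, 24), centroids, max_distance=15)
night_export = is_outlier((3, 900), centroids, max_distance=15)
```

The 3 a.m. bulk export sits far from both learned groups, so it is flagged without anyone having defined what an export attack looks like.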

Neural Networks and Deep Learning

When they're up against the most complex and subtle threats, cybersecurity teams turn to Neural Networks, the technology that underpins deep learning. These models are inspired by the structure of the human brain, built with interconnected layers of "neurons" that can learn incredibly intricate patterns from enormous datasets.

If a basic algorithm is like a hammer, a neural network is more like a highly advanced, self-calibrating robotic arm. It can adapt its approach to handle extremely nuanced tasks, such as:

Detecting Advanced Persistent Threats (APTs): Spotting the low-and-slow, coordinated movements of a sophisticated attacker moving laterally across a network.

Analysing Malicious Scripts: Figuring out the true intent behind obfuscated code in a way that simpler models just can't.

Natural Language Processing (NLP) for Phishing: Going beyond simple keywords to analyse the tone, sentiment, and linguistic tricks in an email to catch highly convincing spear-phishing attacks.

The "deep" in deep learning simply refers to networks with many, many layers. This depth allows them to learn hierarchical features: the first layer might identify raw data points, the next might recognise simple patterns, and deeper layers can combine those patterns to understand complex malicious strategies. Building and fine-tuning these advanced models is a key focus of modern data science and AI development services, which create these custom "brains" to solve specific security challenges.

By understanding these core models, you get a much clearer picture of how a modern security strategy is built, layer by layer, to defend against an ever-widening spectrum of cyber risks.

Putting a Machine Learning Security System into Practice

Bringing machine learning into your cybersecurity toolkit isn't like installing a new antivirus program. It's a fundamental shift that demands careful planning, continuous oversight, and a new way of thinking about threat detection. A successful rollout hinges on three things: preparing your data, navigating the operational hurdles, and building a system where human insight and machine intelligence work in tandem.

One of the first challenges you'll almost certainly run into is the signal-to-noise ratio. In the early days, an ML model can be a bit noisy, flagging a high number of false positives (alerts on perfectly legitimate activity). Without careful tuning, this flood of notifications can bury your security team and lead to "alert fatigue," where the truly critical warnings get lost in the shuffle.

Don't panic. This is a normal part of the process. Your models need time and real-world feedback to learn the unique pulse of your network. The trick is to approach this not as a one-and-done setup, but as a continuous cycle of training, testing, and fine-tuning.

The Human-in-the-Loop Advantage

The best way to wrangle this complexity is by using a "human-in-the-loop" model. Think of it as a strategic partnership between your security analysts and the algorithms. This isn't about replacing people; it's about amplifying their skills.

In this setup, the machine learning system is your first line of defence. It does the heavy lifting, sifting through millions of data points in real time, a scale no human team could ever match. It automatically handles the obvious threats and filters out the low-risk noise, which immediately frees up your experts.

When the algorithm spots something tricky, a high-stakes anomaly or an ambiguous event, it escalates the alert to a human analyst. These are the situations that demand intuition, context, and creative problem-solving. This keeps your team focused on what they do best: investigating genuine threats that require human judgment. Suddenly, machine learning goes from being a source of noise to a powerful force multiplier for your team. This whole strategy works best when it's built on a foundation of proven essential software security practices.
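
One way to picture this routing is a small triage function. The thresholds, the two-level impact scale, and the action names below are hypothetical. The point is only the shape of the rule: clear-cut, low-stakes alerts are automated, and everything ambiguous or high-stakes goes to a person.

```python
def triage(confidence, impact, auto_threshold=0.95, noise_threshold=0.20):
    """Route an alert.

    confidence: model's estimate (0 to 1) that the event is malicious.
    impact: "low" or "high", the potential damage if the event is real.
    """
    if impact == "low" and confidence >= auto_threshold:
        return "auto_block"            # obvious threat, safe to handle automatically
    if impact == "low" and confidence <= noise_threshold:
        return "suppress"              # low-risk noise, kept out of the analyst queue
    return "escalate_to_analyst"       # ambiguous or high-stakes: needs human judgment

routes = {
    "commodity malware on a test VM": triage(0.99, "low"),
    "routine backup job": triage(0.05, "low"),
    "odd login pattern": triage(0.60, "low"),
    "possible domain admin compromise": triage(0.99, "high"),
}
```

Even a high-confidence detection is escalated when the potential impact is high, so automated action stays confined to cases where a wrong call is cheap.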

Taming the False Positive Beast

Let's be honest: the problem of false positives has held back machine learning in security for years. Early systems were often notoriously inaccurate, and security pros grew tired of chasing down ghosts.

But the technology has come a long way. Research from Stanford University on cyber risk models highlights this progress. While older systems sometimes had false positive rates hitting 50%, a modern, collaborative approach dramatically changes the game. The study champions a "collaborative decision paradigm" where machines handle the clear-cut alerts, and humans step in when the risk or uncertainty is high. This approach, tested by several California-based firms, led to a 30–40% drop in missed threats and a 20–25% reduction in pointless alerts. You can dive deeper and read the full Stanford research on collaborative AI security.

The chart from the study below perfectly captures how this decision framework works, balancing automation with human expertise.

As you can see, alerts get sorted based on confidence and potential impact. This ensures your experts are spending their time only on the threats that are both critical and uncertain.

Ultimately, managing an ML security system effectively comes down to a constant feedback loop.

Deploy and Monitor: Get the models running and keep a close eye on how they perform right out of the gate.

Gather Feedback: Your analysts investigate the escalated alerts, labelling false positives and confirming real threats. This is where the human insight comes in.

Retrain and Refine: That feedback gets funnelled back into the system to retrain the models, making them smarter and more accurate with every cycle.

This iterative process steadily drives down false positives and sharpens the system's ability to spot real danger. It builds trust, boosts efficiency, and ensures your machine learning strategy evolves right alongside your organisation and the threats you face.
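
As a rough sketch of one turn of that loop, the example below uses analyst verdicts to re-tune an alert threshold. This is a deliberate simplification: adjusting a threshold stands in for full model retraining, and the scores, candidate thresholds, and `max_fpr` target are made-up values.

```python
def false_positive_rate(feedback, threshold):
    """Share of analyst-confirmed benign events that would still raise an alert."""
    benign_scores = [score for score, is_threat in feedback if not is_threat]
    if not benign_scores:
        return 0.0
    return sum(score >= threshold for score in benign_scores) / len(benign_scores)

def retune_threshold(feedback, candidates, max_fpr=0.3):
    """Pick the lowest threshold that still alerts on every confirmed threat
    while keeping the false positive rate at or below max_fpr.
    Falls back to the highest candidate if none qualifies."""
    threat_scores = [score for score, is_threat in feedback if is_threat]
    for threshold in sorted(candidates):
        catches_all = all(score >= threshold for score in threat_scores)
        if catches_all and false_positive_rate(feedback, threshold) <= max_fpr:
            return threshold
    return max(candidates)

# Hypothetical analyst feedback: (anomaly score, confirmed threat?)
feedback = [
    (0.55, False), (0.62, False), (0.71, False), (0.58, False),
    (0.83, True), (0.91, True), (0.77, True),
]
old_threshold = 0.5
new_threshold = retune_threshold(feedback, candidates=[0.5, 0.6, 0.7, 0.75, 0.8])

fpr_before = false_positive_rate(feedback, old_threshold)
fpr_after = false_positive_rate(feedback, new_threshold)
```

With this feedback, the share of benign events that still trigger an alert falls after one cycle, while every confirmed threat remains above the new threshold.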

Real-World Examples of AI in Cybersecurity

It’s one thing to understand the models and methods behind the curtain, but seeing machine learning for cyber risk prevention out in the wild is where its real value becomes clear. This isn't just theory anymore; it's technology that's actively defending company networks and protecting sensitive data around the clock, all over the globe.

Let's dive into a few concrete examples where machine learning offers a serious upgrade to a company's security posture. These use cases show how ML moves beyond old-school, rigid rules to provide a much smarter, more adaptive defence by analysing behaviour, context, and subtle patterns that would otherwise go unnoticed.

Intelligent Phishing Detection

We’ve all seen traditional email filters. They often rely on flagging basic keywords or checking sender addresses against a blocklist. That's a good first step, but clever attackers can easily get around it by using sophisticated language or spoofing credentials.

This is where machine learning comes in. Instead of just scanning for words like "urgent" or "invoice," these systems take a much deeper look. They use Natural Language Processing (NLP), a subset of AI, to examine the linguistic patterns, tone, and even the sentence structure of an email to spot the subtle clues of a phishing attempt.

On top of that, the models build a profile of what normal communication looks like for your organization. They learn things like:

Who typically emails whom.

The usual times of day people send messages.

What kinds of links and attachments are standard for different teams.

So, when an email supposedly from the CEO arrives at 3 a.m. with a strange link, the system flags it as high-risk, even if it sailed past the traditional keyword filters. It’s that deviation from the established norm that gives the game away.
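
The profile-building part (leaving the NLP analysis aside) might look something like the sketch below. The class name, the signals it checks, and the example addresses and domains are all hypothetical.

```python
from collections import defaultdict

class MailProfile:
    """Learns who emails whom and at which hours, then lists deviations in new mail."""

    def __init__(self):
        self.known_pairs = set()
        self.hours_by_sender = defaultdict(set)

    def observe(self, sender, recipient, hour):
        """Record one legitimate message in the communication profile."""
        self.known_pairs.add((sender, recipient))
        self.hours_by_sender[sender].add(hour)

    def risk_signals(self, sender, recipient, hour, link_domains, trusted_domains):
        """Return the ways a new message departs from the learned profile."""
        signals = []
        if (sender, recipient) not in self.known_pairs:
            signals.append("new sender-recipient pair")
        if hour not in self.hours_by_sender.get(sender, set()):
            signals.append("unusual send time")
        if any(domain not in trusted_domains for domain in link_domains):
            signals.append("unfamiliar link domain")
        return signals

profile = MailProfile()
for hour in range(9, 18):  # the CEO normally writes during office hours
    profile.observe("ceo@example.com", "cfo@example.com", hour)

signals = profile.risk_signals(
    "ceo@example.com", "cfo@example.com", hour=3,
    link_domains=["login-portal.example.net"],
    trusted_domains={"example.com"},
)
```

The sender and recipient are both familiar, so a blocklist would pass this message. It is the send time and link destination that stand out against the learned profile.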

Network Intrusion Detection Systems

Think of your network traffic as a bustling city highway. A classic Intrusion Detection System (IDS) is like a police officer checking license plates against a list of known stolen cars. It works, but it’s completely blind to anything not on that list.

An ML-powered IDS, on the other hand, is more like an experienced traffic controller who has a feel for the entire city's rhythm. It uses unsupervised learning to establish a baseline of normal network activity: which servers talk to each other, the typical volume of data being sent, and the protocols being used.

By understanding the baseline of "normal," the system can instantly spot anomalies that signal a breach in progress.

This could be anything from a server making an unusual outbound connection to a sudden spike in encrypted traffic heading to an unknown destination. It can even spot patterns that suggest an attacker is moving laterally inside the network. This kind of behavioural analysis is absolutely critical for catching stealthy, "low-and-slow" attacks designed to fly under the radar of signature-based tools.
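
A stripped-down version of this baseline can be sketched with flow records. The tuple layout, the IP addresses (taken from private and documentation ranges), and the `spike_factor` of 5 are illustrative assumptions. A real ML-powered IDS would model far more than these two signals.

```python
def build_network_baseline(flow_log):
    """Learn which (source, destination, port) flows are normal
    and the largest transfer seen from each source."""
    known_flows = set()
    peak_bytes = {}
    for src, dst, port, n_bytes in flow_log:
        known_flows.add((src, dst, port))
        peak_bytes[src] = max(peak_bytes.get(src, 0), n_bytes)
    return known_flows, peak_bytes

def inspect_flow(flow, baseline, spike_factor=5):
    """List the ways a new flow deviates from the learned baseline."""
    known_flows, peak_bytes = baseline
    src, dst, port, n_bytes = flow
    findings = []
    if (src, dst, port) not in known_flows:
        findings.append("new connection")
    if src in peak_bytes and n_bytes > spike_factor * peak_bytes[src]:
        findings.append("volume spike")
    return findings

# Hypothetical flows observed during normal operation.
flow_log = [
    ("10.0.0.5", "10.0.0.20", 443, 40_000),
    ("10.0.0.5", "10.0.0.21", 5432, 120_000),
    ("10.0.0.8", "10.0.0.20", 443, 15_000),
]
baseline = build_network_baseline(flow_log)

routine = inspect_flow(("10.0.0.8", "10.0.0.20", 443, 12_000), baseline)
suspicious = inspect_flow(("10.0.0.5", "203.0.113.9", 443, 9_000_000), baseline)
```

The suspicious flow uses an ordinary port and would match no signature. It is flagged only because the destination is new and the volume is far above anything that server has sent before.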

User and Entity Behaviour Analytics

One of the trickiest challenges in security is telling the difference between a legitimate user and a compromised account. This is where User and Entity Behaviour Analytics (UEBA) shines, providing a powerful defence against insider threats and account takeovers.

UEBA systems use machine learning to build a unique behavioural fingerprint for every user and device on the network. This profile is incredibly detailed, including things like:

Login Patterns: Typical login times, geographic locations, and the devices they use.

Data Access: The types of files and servers the user normally interacts with.

Application Usage: The software and tools they engage with day-to-day.

If a user's account suddenly starts acting out of character, say, logging in from a new country, accessing sensitive HR files for the very first time, and trying to download huge amounts of data, the UEBA system flags this as a high-risk event. This gives security teams a crucial heads-up, allowing them to investigate and lock down a potentially compromised account before a major breach occurs. In sectors like finance and healthcare, this kind of proactive monitoring isn't just a nice-to-have; it's essential.
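
The scenario above can be turned into a simple additive risk score. The profile fields, the weights, and the "10 times the typical volume" rule are hypothetical choices for illustration. Commercial UEBA products use their own, usually learned, scoring models.

```python
from dataclasses import dataclass, field

@dataclass
class UserProfile:
    usual_countries: set = field(default_factory=set)
    usual_resources: set = field(default_factory=set)
    typical_daily_mb: float = 0.0

# Hypothetical weights: how much each deviation adds to the risk score (max 100).
WEIGHTS = {"new_country": 40, "new_resource": 30, "bulk_download": 30}

def risk_score(profile, event):
    """Add up the weights of every way the event departs from the user's profile."""
    score = 0
    if event["country"] not in profile.usual_countries:
        score += WEIGHTS["new_country"]
    if event["resource"] not in profile.usual_resources:
        score += WEIGHTS["new_resource"]
    if event["mb_downloaded"] > 10 * profile.typical_daily_mb:
        score += WEIGHTS["bulk_download"]
    return score

profile = UserProfile(
    usual_countries={"CA"},
    usual_resources={"crm", "wiki"},
    typical_daily_mb=50.0,
)

normal_event = {"country": "CA", "resource": "crm", "mb_downloaded": 35}
takeover_like_event = {"country": "RO", "resource": "hr_files", "mb_downloaded": 4000}

normal_score = risk_score(profile, normal_event)
takeover_score = risk_score(profile, takeover_like_event)
```

Each deviation on its own might be innocent, such as a business trip or a new project. Scoring them together is what lets the combination stand out as a likely account takeover.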

Building a Complete Cyber Defence Strategy

While rolling out a machine learning system is a massive step forward, it’s not a silver bullet. The best way to think about it is as one powerful layer in a much larger defensive system, not a magical fix for every problem. After all, a model might be brilliant at predicting a complex, multi-stage attack, but it can’t stop a stressed employee from clicking on a very convincing phishing email.

This is exactly why your technical defences have to work hand-in-hand with human-focused security efforts. Even the most sophisticated machine learning for cyber risk prevention is a house of cards if you ignore the human element, which is still the number one target for most attackers. A clever social engineering ploy or a simple mistake can walk an attacker right past the most expensive digital walls.

Unifying Human and Machine Intelligence

So, what does a truly effective strategy look like? It's one that blends the predictive muscle of your algorithms with the on-the-ground awareness of a well-trained team. The whole point is to create a unified front where human intuition and machine efficiency make each other stronger.

This integrated approach really comes down to a few key areas:

Robust Training Programs: This means regular, engaging cybersecurity awareness training that keeps employees up-to-date on the latest threats they’re likely to see.

Clear Incident Response Plans: When an alert fires, whether it's from an algorithm or a person, everyone needs to know exactly what to do and who to call.

Shared Threat Intelligence: This is about creating a feedback loop. When an employee spots something suspicious, that intelligence should be used to sharpen the ML models. Likewise, when a model detects a new pattern of attack, that information needs to get out to the team in security briefings.

Take the University of California system as a real-world example of this blended approach. As of 2024, they managed to get 90% of their faculty and staff through cybersecurity awareness training, showing a serious commitment to shoring up human-centric risk. This runs in parallel with their investment in AI-driven security tools, proving that both sides of the coin, human and machine, have to evolve together to stand a chance against modern threats. You can read more about California's integrated approach to cyber risk.

A resilient security framework isn't just about having the best tools; it's about creating a culture of security where technology empowers people, and people make technology smarter.

While machine learning gives us an incredible advantage in predicting threats, a solid defence still needs proactive measures against common attack methods. For example, your ML models should be supplemented with a practical guide to preventing ransomware attacks to ensure you’re covering both automated detection and solid procedural defences.

Ultimately, bringing machine learning into your security stack forces you to take a hard look at your entire defensive posture. Our complete guide to business cybersecurity and risk management dives deeper into building this kind of multi-layered, adaptive defence, one that prepares you for the threats you're facing today and the ones that are just around the corner.

Frequently Asked Questions

It's completely normal to have questions when you're looking at bringing machine learning into your cybersecurity strategy. This approach is a world away from the old, signature-based methods, so let's clear up a few common points.

Think of these answers as practical advice, whether you're just starting to explore these tools or are already using them.

How Much Data Do ML Models Need to Be Effective?

That’s a classic “it depends” question. The answer really hinges on the complexity of the problem you're trying to solve. For something straightforward like spotting basic spam emails, a few thousand examples might be all you need to get a model up and running effectively.

But for a far more complex challenge, like network anomaly detection, you’re talking about a different scale entirely. You could need millions of data points just to teach the model what "normal" traffic looks like. The crucial takeaway here is that quality trumps quantity. Your data has to be clean, well-labelled (especially for supervised models), and a true reflection of your actual environment. Otherwise, you risk building biases into the model, making it blind to real threats.

Can Machine Learning Adapt to New Zero-Day Attacks?

Absolutely, and this is arguably one of its biggest strengths. While a supervised model trained only on known threats might miss a brand-new attack vector, this is where unsupervised learning truly shines.

Unsupervised models aren't looking for specific, known-bad signatures. Instead, they focus on establishing a rock-solid baseline of what "normal" looks like across your systems and user activities. When anything deviates significantly from that established pattern, it gets flagged as a potential threat. This is precisely how they can spot the strange, unprecedented behaviour of a zero-day attack without any prior knowledge of it.

What are the First Steps for a Small Business?

If you're a small business, don't try to reinvent the wheel. The most practical and effective path is to adopt security tools that already have machine learning built in. Building custom models from the ground up requires a level of resources, time, and niche expertise that most small businesses simply don't have.

Luckily, many of today's best security products come with ML-powered detection right out of the box. You'll want to look at:

Endpoint Detection and Response (EDR): These are tools that keep a close eye on laptops, servers, and other devices for any signs of suspicious activity.

Next-Generation Firewalls (NGFW): Think of these as a major upgrade to traditional firewalls; they inspect network traffic with a much deeper level of intelligence.

Advanced Email Security: Modern email gateways use machine learning to get remarkably good at catching those sophisticated phishing scams that trick even savvy users.

A great starting point is to pinpoint your biggest risk area: is it phishing, ransomware, or something else? Then, find a best-in-class tool that uses machine learning to tackle that specific problem. This gives you a massive security upgrade without needing to hire a data science team.
