The digital wave has completely reshaped how Canadian insurance companies operate. While this has brought incredible efficiencies, it's also thrown open the doors to a new breed of sophisticated cyber threats. With so much of the business now running on technology, using AI in the insurance industry isn't just a forward-thinking move. It's a critical part of strengthening cybersecurity.

The real challenge for insurers today is striking a delicate balance: how to keep pushing forward with digital innovation without leaving themselves vulnerable. This is precisely where artificial intelligence (AI) and machine learning (ML) are becoming essential tools for defence.

The New Cybersecurity Battlefield for Canadian Insurers

The Canadian insurance sector now operates in a high-stakes digital arena. Every single policy, claim, and customer touchpoint creates a trail of valuable data, and that data is a magnet for cybercriminals. The old way of doing things, waiting for a breach to happen and then scrambling to fix it, is a recipe for disaster. That reactive mindset simply can't keep up with automated attacks that can inflict millions in damages in the blink of an eye.

This new reality calls for a complete overhaul of security strategy. It's no longer enough to just build a taller digital wall around the company. Insurers need smart systems that can see threats coming, flag them, and shut them down before they ever cause damage. It’s about shifting from playing defence to actively anticipating your opponent's next play.

The Urgency of a Proactive Defence

At the heart of this new battlefield is data. Insurers are custodians of a massive amount of sensitive personal and financial information, which makes them an incredibly attractive target. A single successful breach can have a catastrophic domino effect:

Financial Losses: Think regulatory fines, hefty legal bills, and the direct costs of cleaning up the mess.

Reputational Damage: Trust is everything in insurance. A data breach can shatter that trust instantly, sending customers running to competitors.

Operational Disruption: A well-placed attack can bring critical systems to a grinding halt, stopping everything from processing claims to writing new policies.

The core challenge for today's insurers isn't just about adopting new tech for the sake of efficiency. It's about deploying intelligent systems as mission-critical defensive tools. The focus has to shift from reactive damage control to proactive threat prevention.

AI and Machine Learning as Essential Allies

This is precisely where Artificial Intelligence (AI) and Machine Learning (ML) come in. They aren't just another tech upgrade; they are the bedrock of a strong, modern security posture. Picture AI as a tireless security guard, one that can watch millions of data points at once and spot the tiny, almost invisible signs of trouble that a human analyst would easily miss.

To get ahead and successfully navigate this new landscape, Canadian insurers are turning to advanced AI solutions, like an AI-powered financial insights dashboard, to guide their strategic decisions and uncover new opportunities in the market.

These technologies make it possible to move from a passive, defensive stance to an active, predictive security model. By constantly learning from historical attack patterns and analyzing network activity in real time, AI and machine learning can forecast where the next threat might come from and automate a response with incredible speed and accuracy.

This guide will dive into how AI and machine learning are not just patching security holes but building a formidable, intelligent defence against modern cyber threats in the insurance industry.

How AI and Machine Learning Protect Insurance Data

To really understand how AI and machine learning are strengthening cybersecurity, it's helpful to stop thinking of them as a single piece of technology. Instead, picture them as a team of highly specialized digital experts, all working together perfectly. Each one has a specific job, from spotting suspicious activity as it happens to predicting where the next attack might come from. Together, they form an intelligent, lightning-fast security shield.

At their core, AI and ML work by digging through massive amounts of data (network traffic, user logins, application use) at a speed and scale no human team could ever match. The first job is to establish a baseline of what "normal" looks like for your insurance company. This baseline understanding is everything because it’s the only way to spot deviations that might signal a cyberattack.

The Power of Anomaly Detection

Think of an AI-powered anomaly detection system as a seasoned security guard who knows the daily routine of every single person in the building. They know the accountant, Sarah, always logs in from her Toronto office between 9 a.m. and 5 p.m. and only ever touches financial spreadsheets.

So, what happens if a login for Sarah’s account comes through at 3 a.m. from a different country, attempting to access sensitive client policy files? The AI guard instantly flags this as a strange anomaly. It’s a complete break from her established pattern. This triggers an immediate alert or even an automated response, like locking the account, stopping a potential breach before any real damage is done.

This whole process is driven by machine learning algorithms that are constantly refining their understanding of normal behaviour. The system learns and adapts on its own, getting smarter and more accurate over time. This means fewer false alarms and a much better chance of catching real threats. Of course, when you're using AI to handle sensitive information, you also have to master essential data security compliance strategies to ensure all that data is handled responsibly.
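To make the baseline idea concrete, here is a minimal Python sketch of the Sarah scenario. The `Baseline` and `LoginEvent` types, the `deviations` function, the placeholder country code, and the two-deviation threshold are all hypothetical names and values chosen for illustration. A production system would learn the baseline from historical logs rather than have it written by hand.

```python
from dataclasses import dataclass


@dataclass
class Baseline:
    """What 'normal' looks like for one user (hand-written here, learned in practice)."""
    countries: set[str]
    start_hour: int  # inclusive
    end_hour: int    # exclusive
    resources: set[str]


@dataclass
class LoginEvent:
    user: str
    country: str
    hour: int        # 0-23, local time
    resource: str


def deviations(event: LoginEvent, baseline: Baseline) -> list[str]:
    """Return the ways this event departs from the user's baseline."""
    reasons = []
    if event.country not in baseline.countries:
        reasons.append("unusual country")
    if not (baseline.start_hour <= event.hour < baseline.end_hour):
        reasons.append("unusual hour")
    if event.resource not in baseline.resources:
        reasons.append("unusual resource")
    return reasons


sarah = Baseline(countries={"CA"}, start_hour=9, end_hour=17,
                 resources={"financial_spreadsheets"})
# "XX" is a placeholder for any country outside Sarah's baseline.
event = LoginEvent(user="sarah", country="XX", hour=3, resource="client_policy_files")

reasons = deviations(event, sarah)
if len(reasons) >= 2:  # hypothetical threshold for an automated lock
    print(f"Lock account {event.user}: {', '.join(reasons)}")
```

Returning the list of reasons, rather than a bare yes or no, gives the analyst who reviews the alert something to act on.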

Predictive Analytics: Forecasting Future Threats

Beyond just spotting weird behaviour in the here and now, another key role for AI is predictive analytics. You can think of this as a cybersecurity weather forecast. It uses historical data and global threat intelligence to predict where and how the next storm or cyberattack is likely to hit.

These systems analyze past security incidents, both inside the company and from around the world, to find recurring patterns and new attack methods. By understanding the go-to tactics of cybercriminals, the AI can start to anticipate their next moves.

Predictive analytics lets insurers move from a reactive stance to a proactive one. Instead of just responding to breaches, they can strengthen the very systems that are most likely to be targeted next.

This kind of foresight is a true game-changer. For example, if the AI notices a global uptick in phishing attacks aimed at claims departments, it can automatically tighten up email filtering for that specific team or suggest they receive extra training.
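One simple way to picture "noticing an uptick" is to compare the latest week against recent history. The sketch below is only that: the `is_uptick` function, the two-standard-deviation threshold, and the weekly counts are all made up for illustration, and real predictive systems draw on far richer threat intelligence.

```python
from statistics import mean, stdev


def is_uptick(weekly_counts: list[int], threshold_sigmas: float = 2.0) -> bool:
    """True if the latest week is unusually high relative to earlier weeks."""
    *history, latest = weekly_counts
    if len(history) < 2:
        return False  # not enough history to estimate the spread
    mu, sigma = mean(history), stdev(history)
    return latest > mu + threshold_sigmas * sigma


# Made-up weekly counts of phishing emails reported by the claims department.
phishing_reports_claims = [12, 15, 11, 14, 13, 31]

if is_uptick(phishing_reports_claims):
    print("Tighten email filtering for claims and suggest refresher training")
```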

Automating Responses with Speed and Precision

The final piece of this puzzle is the automated response. When AI identifies a credible threat, it doesn't just ping the IT department and wait for someone to read an email. It can take immediate, pre-approved actions to neutralize the threat in milliseconds.

This speed is crucial. Modern cyberattacks move so fast that they can easily overwhelm human response teams. A machine learning-driven system, on the other hand, can instantly:

Isolate a Compromised Device: If an employee's laptop shows signs of malware, the AI can disconnect it from the network right away to stop the infection from spreading.

Block Malicious IP Addresses: The system can block all traffic from sources known for cybercrime, effectively slamming the door on attackers.

Update Security Rules: AI can dynamically update firewall rules across the entire network to defend against a newly discovered threat.
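The three actions above can be pictured as a pre-approved playbook that maps a threat type to a response. This is a hedged sketch, not any vendor's API: the function names, the threat-type labels, and the example device and IP address are hypothetical, and each action only returns a description instead of touching a real network.

```python
from typing import Callable


def isolate_device(target: str) -> str:
    return f"isolated device {target}"


def block_ip(target: str) -> str:
    return f"blocked IP {target}"


def update_firewall_rule(target: str) -> str:
    return f"pushed firewall rule for {target}"


# Pre-approved playbook: threat type -> action. Anything not listed goes to a human.
PLAYBOOK: dict[str, Callable[[str], str]] = {
    "malware_on_endpoint": isolate_device,
    "known_malicious_ip": block_ip,
    "new_threat_signature": update_firewall_rule,
}


def respond(threat_type: str, target: str) -> str:
    """Run the pre-approved action, or escalate if none exists."""
    action = PLAYBOOK.get(threat_type)
    if action is None:
        return f"escalated {threat_type} on {target} to an analyst"
    return action(target)


print(respond("malware_on_endpoint", "laptop-042"))
print(respond("known_malicious_ip", "203.0.113.7"))
print(respond("unrecognized_behaviour", "server-db-1"))
```

Keeping the playbook as an explicit table makes it easy to audit which responses have actually been approved for automation.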

This combination of anomaly detection, predictive analytics, and automated response creates a powerful, multi-layered defence. You can learn more about the role AI and machine learning play in transforming security engineering. It’s this intelligent foundation that makes modern cybersecurity so effective, allowing insurers to protect their data with incredible precision and speed.

Real-World Applications of AI and ML in Cyber Defence

Let's move from theory to the real world and see how AI in the insurance industry is actually beefing up cyber defences. These aren't just futuristic concepts; insurers are using these tools right now to protect their operations, their data, and, most importantly, their customers from a constant barrage of digital threats. Each application of AI and machine learning offers a clear business advantage, whether it's cutting financial losses or simply building more trust with policyholders.

The most obvious and immediate impact is in fraud detection. Insurance fraud bleeds the industry of billions every year, and the old, rule-based systems just can't keep up with today's sophisticated scams. AI and machine learning, on the other hand, can analyze claims as they come in, cross-referencing thousands of data points to spot suspicious activity with incredible accuracy.

Real-Time Fraud Detection and Prevention

Picture this: an AI system is reviewing a new car insurance claim. It’s not just looking at the basic form fields. It's digging deeper, analyzing the claimant's entire history, the specific location of the incident, the photos of the damage, and even the nuances of the language used in the report. It then compares all of this against a massive, historical database of both legitimate and fraudulent claims.

If the machine learning model spots a red flag (maybe the photos show damage that doesn't quite match the accident description, or the claimant has a history of making similar small claims), it immediately flags the case for a human expert to review. This simple step allows investigators to stop chasing payments that have already gone out and start preventing fraud before a claim is paid. It's a powerful application of security engineering that strengthens the entire claims process.
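The flag-for-review flow can be sketched in a few lines of Python. Everything here is an illustrative assumption: the `Claim` fields, the `red_flags` and `route` functions, and the limit of two prior claims are hypothetical. In a real system, a value like `damage_matches_description` would come from a trained model comparing photos and text, not from a hand-set boolean.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    claim_id: str
    damage_matches_description: bool  # assumed output of an upstream model
    similar_small_claims: int         # prior small claims of the same type


def red_flags(claim: Claim, max_similar_claims: int = 2) -> list[str]:
    """Collect reasons a human investigator should look at this claim."""
    flags = []
    if not claim.damage_matches_description:
        flags.append("photo damage does not match accident description")
    if claim.similar_small_claims > max_similar_claims:
        flags.append("history of similar small claims")
    return flags


def route(claim: Claim) -> str:
    """Send flagged claims to a person; let clean claims continue automatically."""
    return "human_review" if red_flags(claim) else "auto_process"


print(route(Claim("C-1001", damage_matches_description=False, similar_small_claims=0)))
print(route(Claim("C-1002", damage_matches_description=True, similar_small_claims=1)))
```

Note that the sketch only routes a claim to a person. It never denies a claim on its own, which matches the human-review step described above.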

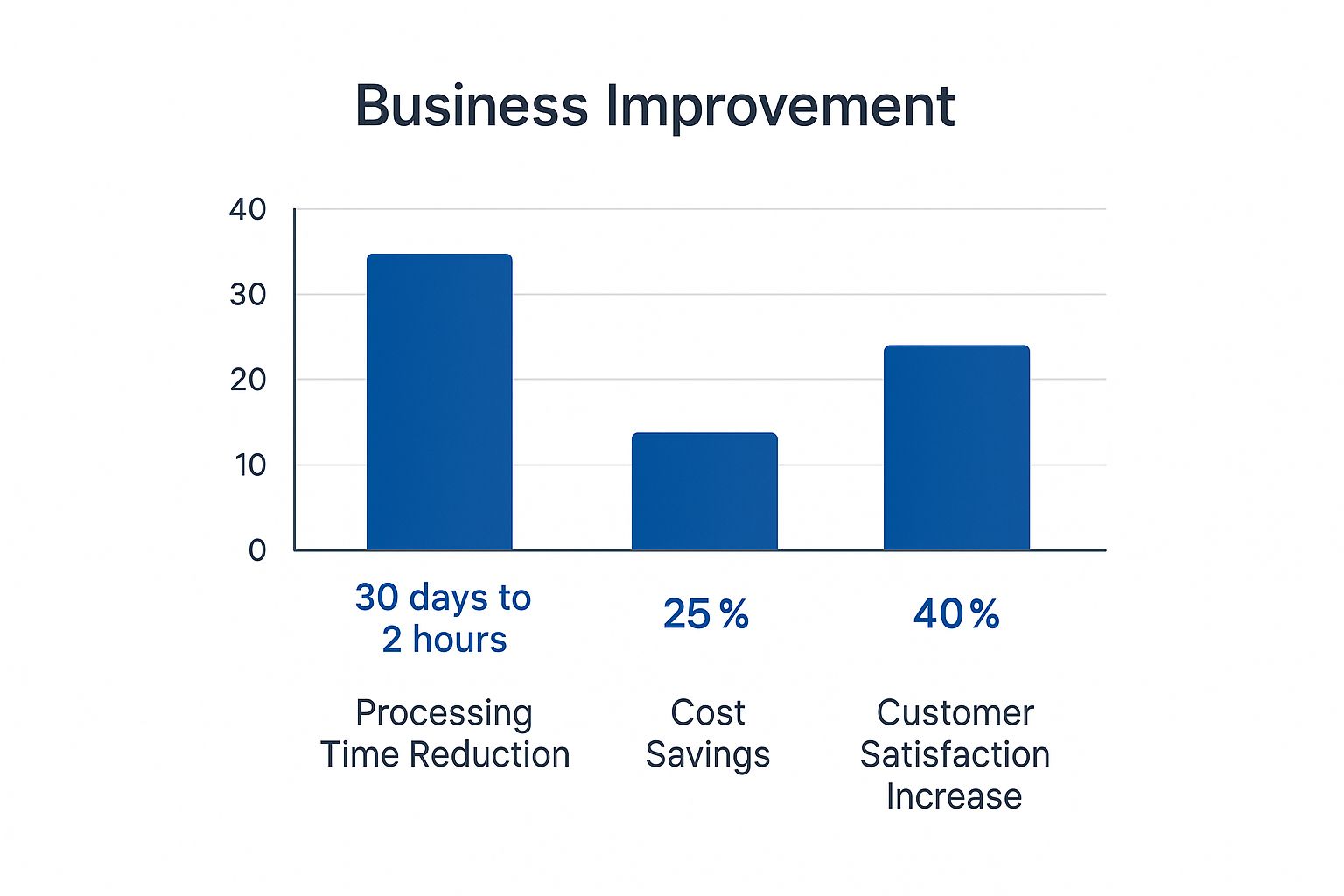

The results of this AI-driven approach are significant. As the image above shows, it can lead to a huge drop in processing time, major cost savings, and even a noticeable bump in customer satisfaction.

It's clear that AI-powered automation isn't just an internal efficiency tool; it creates a better, faster, and more secure experience for everyone involved.

Proactive Threat Intelligence

Another area where AI really shines is in threat intelligence. Instead of just reacting to attacks, AI platforms can actually help forecast emerging cyber threats before they hit the mainstream. These systems are always on, constantly scanning the global threat landscape, from dark web forums to new malware signatures and chatter among hacker groups.

This constant vigilance allows them to identify new attack methods and vulnerabilities the moment they appear. For an insurer, this could mean getting an early heads-up about a new strain of ransomware targeting financial services or a clever phishing campaign designed to trick employees using specific software.

By forecasting threats, AI enables insurers to patch vulnerabilities, update security protocols, and train employees on specific risks before attackers even have a chance to launch their campaigns. This proactive posture is fundamental to building genuine operational resilience.

This intelligence-first approach takes the guesswork out of cybersecurity. It helps insurers direct their security budgets where they’ll have the most impact, focusing on the real-world threats that are most likely to cause damage.

Automated Security and Breach Containment

Of course, when a threat does manage to slip past the initial defences, speed is everything. An AI-powered security system can contain a breach in milliseconds, far faster than any human team could hope to react. This is the world of Security Orchestration, Automation, and Response (SOAR).

Here's a quick look at how it works in practice:

Detection: An AI monitoring tool spots unusual activity, like a user account suddenly trying to access hundreds of sensitive client files at once.

Analysis: The system instantly assesses the activity, confirms it matches the signature of a known ransomware attack, and pinpoints the compromised account.

Response: Within milliseconds, the SOAR platform triggers a pre-set response. It might automatically lock the user account, quarantine the infected device from the network, and block the malicious IP address, all without anyone lifting a finger.
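The detection and response steps above can be sketched as follows. This is an assumption-laden illustration, not how any particular SOAR product works: the `AccessBurstDetector` class, the `contain` function, the 100-files-per-minute limit, and the account, device, and IP values are all hypothetical, and the analysis step (matching a known ransomware signature) is left out.

```python
from collections import deque


class AccessBurstDetector:
    """Flags an account that touches too many sensitive files within a time window."""

    def __init__(self, max_files: int = 100, window_seconds: float = 60.0):
        self.max_files = max_files
        self.window_seconds = window_seconds
        self._events: dict[str, deque] = {}

    def record(self, account: str, timestamp: float) -> bool:
        """Record one file access; return True if the account exceeds the limit."""
        events = self._events.setdefault(account, deque())
        events.append(timestamp)
        # Drop accesses that have fallen out of the window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) > self.max_files


def contain(account: str, device: str, ip: str) -> list[str]:
    """Pre-set response: described here as strings rather than real network calls."""
    return [f"lock account {account}",
            f"quarantine device {device}",
            f"block IP {ip}"]


detector = AccessBurstDetector(max_files=100, window_seconds=60.0)
actions: list[str] = []
for i in range(300):  # simulated burst: 300 file accesses, 0.1 seconds apart
    if detector.record("jdoe", i * 0.1):
        actions = contain("jdoe", "laptop-042", "203.0.113.7")
        break

print(actions)
```

The sliding window matters: an employee who opens a few hundred files over a full working day never trips the limit, while a burst within a minute does.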

This rapid, automated response is absolutely critical for minimizing the fallout. It contains the threat before it can spread, protecting sensitive customer data and stopping a minor incident from snowballing into a major crisis.

AI isn't just a global trend; its adoption in the Canadian insurance market is growing quickly and is expected to expand significantly through to 2031. Technologies like machine learning and predictive analytics are at the heart of this shift, driving automation in everything from claims and underwriting to fraud detection. For a deeper dive into these projections, you can discover more insights about Canada's AI insurance market. By putting AI and machine learning to work in these key areas, Canadian insurers aren't just defending themselves; they're building a more secure, efficient, and trustworthy foundation for the future.

Overcoming AI Implementation Hurdles

While the promise of using AI and machine learning to strengthen cybersecurity is compelling, getting there isn't as simple as flipping a switch. The road to successful adoption is often paved with challenges that demand careful planning, serious investment, and a realistic understanding of the potential roadblocks. For Canadian insurers, these hurdles are a mix of thorny technical problems and important ethical questions that need to be tackled head-on.

One of the first hurdles is the sticker shock. AI cybersecurity platforms aren't off-the-shelf products. They require a significant upfront investment in software and hardware, not to mention the specialized talent needed to run them. Finding people who understand both cybersecurity and AI is tough in today's job market, and that scarcity drives up costs.

Then there's the issue of legacy IT systems. Many established insurers are running on infrastructure that's been around for decades. These older systems were never built to talk to modern AI platforms, which creates a huge technical gap. Trying to get a brand-new AI tool to work smoothly with old, rigid infrastructure can be a nightmare, often demanding a lot of custom, expensive development work just to bridge the two worlds.

Navigating Data Privacy and Ethical Mazes

Beyond the tech, insurers have to navigate a complex web of data privacy regulations. AI models are hungry for data, but in Canada, laws like PIPEDA put strict limits on how personal information can be used. Insurers have to be absolutely sure their AI models are trained and operated in full compliance, which means having rock-solid data governance and a deep understanding of their legal responsibilities.

This brings us to the elephant in the room: algorithmic bias. An AI is only as fair as the data it learns from. If the historical data used to train a machine learning model contains hidden biases, say, against certain neighbourhoods or demographics, the AI could end up perpetuating or even amplifying those unfair practices in how it assesses risk or flags fraud.

This is where fairness and transparency become non-negotiable. Insurers must be able to explain how their AI makes its decisions, especially when a customer is negatively impacted. This idea of "explainability" isn't just a nice-to-have; it's fast becoming a regulatory necessity.
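A basic fairness check is to compare how often the model flags claims from different groups. The sketch below is one simple assumption of what that could look like: the `flag_rates` and `disparity_ratio` functions, the group labels, the made-up decisions, and the 0.8 cutoff are all hypothetical, and a real review would use proper statistical testing and legal guidance.

```python
from collections import Counter


def flag_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of claims flagged as suspicious, per group."""
    totals, flagged = Counter(), Counter()
    for group, was_flagged in decisions:
        totals[group] += 1
        flagged[group] += was_flagged  # True counts as 1, False as 0
    return {group: flagged[group] / totals[group] for group in totals}


def disparity_ratio(rates: dict[str, float]) -> float:
    """Lowest group flag rate divided by the highest (1.0 means identical rates)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Made-up model decisions: (neighbourhood, was the claim flagged?)
decisions = ([("area_a", True)] * 5 + [("area_a", False)] * 95
             + [("area_b", True)] * 15 + [("area_b", False)] * 85)

rates = flag_rates(decisions)
ratio = disparity_ratio(rates)
if ratio < 0.8:  # hypothetical cutoff for triggering a manual review
    print(f"Flag rates differ noticeably across groups: {rates}")
```

A low ratio does not prove the model is biased, since the groups may genuinely differ, but it tells you where to start asking for an explanation.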

Another emerging concern is how AI is starting to shape public perception. One analysis predicts that by 2025, a staggering 21.77% of all Canadian insurance-related online reviews could be generated by AI. This raises serious questions about authenticity and trust. It could easily dilute the value of real customer feedback, as explored in the full findings on how AI is shaping Canadian insurance reviews.

Taming the Beast of Alert Fatigue

One of the more surprising challenges that pops up when implementing a new AI security system is something called "alert fatigue." At first, these systems can be a bit like an overeager puppy, flagging every tiny deviation from the norm. The result? Security teams are swamped with thousands of notifications, and the truly critical threats get lost in the noise.

Learning to manage this is key to making AI a help, not a hindrance. It takes a strategic approach to fine-tune the system properly:

Establish a Clear Baseline: The first step is to teach the AI what "normal" looks like for your specific operations.

Prioritize Critical Alerts: You need to configure the system to rank threats by severity, pushing the most dangerous alerts to the front of the line.

Integrate Human Expertise: Your experienced security pros are your best asset. Use their knowledge to train the machine learning models on what constitutes a false positive, helping them get smarter and more accurate over time.
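The last two steps, ranking by severity and folding in analyst feedback, can be sketched as a small triage function. The `Alert` type, the severity labels, and the idea of keying false positives by rule and source are hypothetical simplifications. Real platforms typically feed analyst verdicts back into model training, not just a suppression list.

```python
from dataclasses import dataclass

SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3}


@dataclass(frozen=True)
class Alert:
    rule: str
    source: str
    severity: str


def triage(alerts: list[Alert],
           known_false_positives: set[tuple[str, str]]) -> list[Alert]:
    """Drop analyst-confirmed false positives, then sort most severe first."""
    kept = [a for a in alerts if (a.rule, a.source) not in known_false_positives]
    return sorted(kept, key=lambda a: SEVERITY_RANK[a.severity])


alerts = [
    Alert("odd_login_hour", "backup-service", "low"),
    Alert("mass_file_access", "jdoe", "critical"),
    Alert("new_device", "asmith", "medium"),
]
# Analysts have confirmed the nightly backup job always logs in at odd hours.
confirmed_noise = {("odd_login_hour", "backup-service")}

for alert in triage(alerts, confirmed_noise):
    print(alert.severity, alert.rule, alert.source)
```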

By carefully tuning these systems, insurers can turn that overwhelming flood of data into a focused stream of genuine, actionable insights. Successfully clearing these hurdles, from technical integration and regulatory compliance to ethical oversight and practical system management, is what will ultimately unlock the full protective power of AI and machine learning.

A Strategic Roadmap for AI Adoption

Bringing AI into the insurance industry to bolster cybersecurity isn't about just buying the latest software. It's about having a clear, practical plan. For insurers looking to really strengthen their defences, the smartest path forward is a phased approach. The idea is to start small, prove the value quickly, and then build on those successes for a lasting impact on your security.

The whole journey kicks off with an honest look at your current weak spots. You can't protect everything at once, so the first real job is figuring out where your company is most exposed. Are sophisticated phishing scams targeting your claims department? Maybe the risk of an internal data breach keeps you up at night? Pinpointing these critical vulnerabilities helps you aim your initial AI efforts where they’ll count the most.

Phase 1: Identify and Prioritize

Before you spend a single dollar, a thorough internal audit is non-negotiable. This means mapping out all your digital assets, identifying potential entry points for attackers, and getting a real sense of the business impact if a breach happened in each of those areas.

The main goal here is to create a prioritized list of your biggest security headaches. This list becomes the bedrock of your AI strategy, making sure your first projects are both targeted and meaningful.

Vulnerability Assessment: Take a deep dive into your network, applications, and data storage to uncover any existing weaknesses.

Business Impact Analysis: Figure out which systems and data are essential to your operations and what the financial and reputational damage of a compromise would be.

Define Clear Objectives: For each top-priority weak spot, set a specific, measurable goal you want AI to help you achieve (e.g., "cut down successful phishing attacks by 50%").
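One common way to turn the assessment and impact analysis into a ranked list is to score each weak spot as likelihood times impact. The sketch below assumes that approach; the 1-to-5 scales, the example risks, and their scores are invented for illustration, not taken from any real audit.

```python
# Each risk is scored 1-5 for likelihood and 1-5 for business impact (made-up values).
risks = [
    {"name": "phishing against claims department", "likelihood": 4, "impact": 4},
    {"name": "internal data breach", "likelihood": 2, "impact": 5},
    {"name": "unpatched legacy policy system", "likelihood": 3, "impact": 3},
]


def prioritize(risks: list[dict]) -> list[dict]:
    """Order risks by likelihood x impact, highest score first."""
    return sorted(risks, key=lambda r: r["likelihood"] * r["impact"], reverse=True)


for rank, risk in enumerate(prioritize(risks), start=1):
    score = risk["likelihood"] * risk["impact"]
    print(f"{rank}. {risk['name']} (score {score})")
```

The top entry on this list is a natural candidate for the first pilot project.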

Phase 2: Launch Targeted Pilot Projects

With your priorities straight, it's time to launch a small, controlled pilot project. Resist the urge to go for a massive, company-wide overhaul right out of the gate. A successful pilot builds momentum, offers priceless learning opportunities, and creates a rock-solid business case for more investment down the line.

Pick one single, well-defined problem from your priority list. For instance, you could roll out an AI-powered email security tool just for your finance department to automatically spot and quarantine advanced phishing threats. The key is to keep the scope tight and the goals crystal clear.

A successful pilot project is your best internal marketing tool. It provides hard data and a clear return on investment, making it much easier to get buy-in from leadership for larger-scale AI initiatives.

While the pilot is running, track everything. Keep a close eye on key performance indicators (KPIs) like the number of threats detected, the reduction in manual security tasks, and the time your team gets back. This data will be absolutely vital for proving the technology's worth.
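Checking a pilot against an objective like the 50% reduction mentioned in Phase 1 is simple arithmetic. Here is a small sketch, with hypothetical function names and made-up incident counts:

```python
def percent_reduction(before: int, after: int) -> float:
    """Percentage drop from the pre-pilot count to the pilot count."""
    if before <= 0:
        raise ValueError("baseline count must be positive")
    return 100 * (before - after) / before


def objective_met(before: int, after: int, target_percent: float = 50.0) -> bool:
    """True if the reduction reaches the target set during planning."""
    return percent_reduction(before, after) >= target_percent


# Made-up numbers: successful phishing attacks per quarter, before and during the pilot.
baseline_incidents = 40
pilot_incidents = 18

reduction = percent_reduction(baseline_incidents, pilot_incidents)
print(f"Reduction: {reduction:.1f}% | target met: "
      f"{objective_met(baseline_incidents, pilot_incidents)}")
```

For the comparison to be fair, the two periods should be the same length and cover the same teams.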

Phase 3: Build a Data-Centric Culture

For AI to really do its job, it needs good, clean data. That means building a culture where data is treated like the strategic asset it is, right across the entire organization. This shift is a huge part of the broader digital transformation in insurance, and it often means breaking down the data silos that exist between departments.

Getting your teams up to speed is another massive piece of the puzzle. Your security analysts need to know how to work alongside AI tools, make sense of their outputs, and give feedback that makes the machine learning models smarter over time. Not everyone needs to become a data scientist, but a solid, foundational understanding of AI is essential.

Phase 4: Establish Robust Governance and Scale

As you graduate from successful pilots to wider rollouts, strong governance becomes absolutely critical. You need a framework that lays out clear policies for how AI is used, who's accountable for its performance, and how you'll ensure it operates ethically and without bias.

Canadian insurance leaders are definitely showing an appetite for this kind of tech. A recent survey found that 78% plan to increase their technology budgets, with 36% naming AI as their top innovation priority. Still, many are moving carefully; 30% of carriers and 41% of agencies are still in the early exploration stages. You can get more insight into this cautious but optimistic trend by exploring the 2025 insurance tech trends on wolterskluwer.com.

With a solid governance framework in place, you can start to scale your wins. Take what you learned from your pilot projects and apply it to other high-priority areas. This methodical, step-by-step approach ensures that your use of AI in the insurance industry grows in a way that’s sustainable, responsible, and incredibly effective.

The Future of AI and Cybersecurity in Insurance

Looking ahead, the partnership between artificial intelligence and cybersecurity is only going to get tighter, completely changing how the insurance industry protects itself. The conversation has shifted dramatically. It's no longer a question of if insurers should adopt AI, but how fast and how well they can weave it into their security DNA. Using AI and machine learning for cyber defence has gone from a nice-to-have to an absolute must.

This isn't just an upgrade; it's the start of a new chapter where defence becomes predictive, not just proactive. AI is the engine that lets security teams get ahead of threats, stopping them before they cause real damage, and learning from every single interaction to get smarter over time.

The Dawn of Autonomous Security

The next big leap? Fully autonomous security systems. Picture a digital immune system for an insurance company, one that can spot, isolate, and shut down threats all on its own. Human experts would only need to step in for the most high-level strategic calls. These systems won't just react in a split second; they'll be able to patch themselves and reshape network defences on the fly.

Another huge development on the horizon is quantum computing. It’s still early days, but quantum computers could one day shatter today's encryption standards. This is where AI will become indispensable, helping to create new, quantum-resistant encryption methods to keep sensitive policyholder data safe from tomorrow's threats.

The ultimate goal is to build a security ecosystem that is not only resilient but also self-improving. For Canadian insurers, this means viewing strategic AI adoption as more than just a defensive measure. It is a powerful competitive advantage that fosters trust and ensures long-term stability.

As things continue to evolve, offering thorough cyber insurance training is crucial. It helps both insurers and their clients get a handle on the new risks and the protections available. Leaning into these future trends is how insurers will build a more secure, resilient, and trustworthy foundation for the entire industry.

Frequently Asked Questions

It’s natural to have questions when you’re looking at bringing new technology like AI and machine learning into the world of insurance cybersecurity. Let's dig into some of the most common ones and get you some straight answers.

How do AI and Machine Learning Actually Spot Insurance Fraud?

Think of old fraud detection systems as simple tripwires. They could only catch someone who broke a very specific, pre-set rule. AI and machine learning are completely different. They act more like a team of seasoned detectives, constantly learning and connecting the dots in ways a human just can't, at least not at that scale.

Machine learning algorithms dive into vast oceans of data in real time: claim details, customer history, external reports, and even the metadata in photos. The system builds an incredibly detailed picture of what a "normal" claim looks like. It then flags the weird stuff, the outliers that don't quite fit. Maybe a claimant's written description doesn't match the damage in the photos, or perhaps there's a faint but suspicious link to a dozen other claims. It’s this knack for spotting subtle, complex patterns that makes AI so effective against modern fraud schemes.
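The "suspicious link to a dozen other claims" idea can be illustrated by grouping claims that share a contact detail. This is a deliberately simple sketch: the `linked_claims` function, the field names, the minimum group size, and the sample records are hypothetical, and real systems use much richer link analysis.

```python
from collections import defaultdict


def linked_claims(claims: list[dict],
                  keys: tuple[str, ...] = ("phone", "bank_account"),
                  min_size: int = 3) -> dict[tuple[str, str], set[str]]:
    """Group claim IDs that share a value for any of the given fields."""
    groups: dict[tuple[str, str], set[str]] = defaultdict(set)
    for claim in claims:
        for key in keys:
            value = claim.get(key)
            if value:
                groups[(key, value)].add(claim["id"])
    # Keep only groups large enough to be worth an investigator's time.
    return {k: ids for k, ids in groups.items() if len(ids) >= min_size}


# Made-up claims; three different claimants share one phone number.
claims = [
    {"id": "C-1", "phone": "555-0100", "bank_account": "A1"},
    {"id": "C-2", "phone": "555-0100", "bank_account": "B2"},
    {"id": "C-3", "phone": "555-0100", "bank_account": "C3"},
    {"id": "C-4", "phone": "555-0199", "bank_account": "D4"},
]

for (field, value), ids in linked_claims(claims).items():
    print(f"{len(ids)} claims share {field} {value}: {sorted(ids)}")
```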

What’s a Good First Step for a Small Agency to Use AI for Security?

Jumping into AI security doesn't mean you have to hire a team of data scientists and build something from the ground up. For a small insurance agency, the best and most practical first move is to adopt cloud-based security tools that already have AI and machine learning baked right in.

Focus on services that deliver immediate value, like:

Smarter Email Filtering: These systems use AI to catch sophisticated phishing scams and malware that would easily trick older, rule-based filters.

Next-Gen Endpoint Protection: This is about securing your team's laptops and phones. Modern endpoint security uses AI to spot and stop threats on a device before they can ever reach your network.

By starting with these kinds of AI-powered tools, a smaller agency can get a massive security upgrade without a massive investment. You're basically renting the expertise that's already built into the platform.

For smaller firms, the goal isn't to build AI. It's to choose services where AI is already working behind the scenes to give you a much higher level of protection.

Can AI Completely Replace Human Cybersecurity Experts?

Not a chance. Think of AI as an incredibly powerful partner for your human security team, not a replacement. It’s a classic case of human-machine collaboration. AI and your experts are simply good at different things.

AI and machine learning are brilliant at handling the mind-numbing volume of data and alerts that flood in every single day. They can monitor networks and sift through logs 24/7 without getting tired or bored, automating the routine work. This frees up your human experts to do what they do best: investigate complex threats, think strategically about your defences, and make those critical judgment calls that require real-world context and experience.

In short, AI does the heavy lifting, which lets your people be more effective and focused.

At Cleffex Digital Ltd, we build advanced software solutions that help businesses in the insurance sector and beyond tackle their biggest technology challenges. Find out how our expertise can strengthen your operations by visiting us at Cleffex.