Artificial intelligence is quickly becoming a vital tool for medical professionals right across Canada. Think of it not as a replacement for human doctors, but as a tireless digital partner, helping clinicians diagnose diseases sooner, see patterns the human eye might miss, and build personalized treatment plans with greater precision. It's less about taking over and more about augmenting a doctor's skills, much like a co-pilot assists a pilot in the cockpit.

As we integrate these powerful tools into our healthcare system, we face a critical challenge: how do we harness the immense potential of AI in healthcare while upholding the ethical principles that form the bedrock of medicine?

The New Digital Partner in Canadian Healthcare

Bringing AI into healthcare marks a genuine shift in how we deliver medical services. Picture an algorithm that can sift through thousands of medical images in just minutes, flagging tiny abnormalities that could signal the earliest stages of a disease. This gives doctors a chance to step in much earlier, which often leads to far better outcomes for patients.

This digital partnership goes beyond just diagnostics, too. AI is helping to handle the administrative load that often leads to physician burnout, freeing up doctors to spend more quality time with their patients and on complex medical decisions. Certain technologies, like Natural Language Processing (NLP) applications, are already changing how we manage and make sense of healthcare data.

A Rapidly Growing Field

The momentum here is undeniable. The AI healthcare market in Canada is on a steep upward trajectory, expected to jump from $1.1 billion CAD in 2023 to $10.8 billion by 2030. That’s a compound annual growth rate of about 37.9%, a pace that's even a bit faster than what's projected for the United States.
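As a quick check on the arithmetic, the compound annual growth rate (CAGR) is the constant yearly rate that turns a starting value into an ending value over a given number of years: (end / start)^(1 / years) - 1. A minimal Python sketch, applied to the rounded endpoints quoted above (2023 to 2030, so seven years of growth):

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Rounded market-size figures from the text, in billions of CAD.
rate = cagr(start_value=1.1, end_value=10.8, years=2030 - 2023)
print(f"CAGR: {rate:.1%}")  # CAGR: 38.6%
```

With these rounded endpoints the formula gives roughly 38.6%, in line with the quoted figure; the small difference may come from rounding or from the exact base-year values in the underlying forecast.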

This rapid adoption highlights AI's potential, but it also forces us to confront serious ethical questions. As AI becomes part of everyday clinical workflows, the central challenge is clear: how do we foster groundbreaking innovation while rigorously upholding core patient values and ethical standards, so that patients stay safe and their trust is maintained?

The Core Ethical Dilemma

At its heart, the issue is about balancing progress with responsibility. The very data that fuels medical breakthroughs must be rigorously protected. To see what is at stake, it's worth looking at the many ways AI is already making a difference.

This guide will walk through the crucial ethical considerations that come with this new digital partner. We'll explore how Canada can be a leader in AI innovation while making sure every solution is safe, fair, and puts the patient’s well-being at its centre.

Navigating the Thorny Ethical Questions of Medical AI

As AI becomes more deeply embedded in daily clinical work, we're forced to confront a host of complex ethical questions. The potential for good is undeniable, but we have to tread carefully, addressing the tough challenges head-on to make sure this technology serves patients safely and equitably.

These aren't just technical glitches we can patch over. They cut to the very heart of trust, fairness, and responsibility in medicine. Getting a handle on them is the first real step toward creating a future where innovation and our ethical duties can move forward together.

Patient Data Privacy: The Fuel of AI

Think of patient data as the high-octane fuel that powers every medical AI system. These algorithms are completely dependent on massive amounts of information, everything from lab results and medical scans to personal health histories. Without it, they can't learn, they can't improve, and they can't function. This creates a monumental responsibility to safeguard some of the most private information a person has.

Every single data point used to train an AI model is tied to a real person, their story, and their fundamental right to privacy. The tightrope we have to walk is using this data for the greater good, like creating smarter diagnostic tools, without ever putting individual confidentiality at risk. A single data breach could shatter patient trust, not just in one clinic, but in the entire digital health ecosystem.

This balancing act is absolutely central to rolling out AI in healthcare ethically. It demands rock-solid security and transparent policies that dictate exactly how data is gathered, stored, and used. Privacy can't be an afterthought; it has to be baked in from the very beginning.

Algorithmic Bias: A Hidden Danger

Here’s a hard truth: an AI system is only as unbiased as the data it learns from. If that data reflects the historical inequities or societal biases already present in our healthcare system, the AI will not only learn those prejudices but can actually magnify them. This is what we call algorithmic bias, and it represents a profound threat to providing fair care for everyone.

For instance, if a diagnostic algorithm is trained almost exclusively on data from one demographic, it might perform poorly for patients from underrepresented groups. This could easily lead to missed diagnoses or delayed treatments for the very people who often face the biggest health challenges.

Algorithmic bias isn't usually a deliberate act of prejudice. It's an unintentional, but deeply harmful, consequence of skewed data. It risks creating a two-tiered system of care, where the benefits of AI flow to some communities but not others.

Fixing this requires a conscious, dedicated effort to engineer fairness into these systems right from the start. That means:

Diversifying Datasets: We have to actively hunt for and include data from the widest possible range of populations. The goal is to build a model that truly reflects the diversity of the patients it will eventually serve.

Regular Audits: It's not a one-and-done process. Algorithms need to be constantly tested for biased outcomes, with ongoing adjustments to make them fairer.

Inclusive Development Teams: Putting people with different backgrounds and life experiences on the development teams is key. They can spot cultural blind spots and biases long before they get coded into the technology.
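A regular audit can start with something as simple as comparing a model's sensitivity (the share of true cases it catches) across patient groups. The sketch below assumes a hypothetical list of (group, true label, predicted label) records and an illustrative 0.05 tolerance for the gap; a real audit would use clinically chosen metrics and thresholds.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return {group: true-positive rate} for records of (group, y_true, y_pred)."""
    positives = defaultdict(int)  # actual positive cases per group
    caught = defaultdict(int)     # positives the model correctly flagged
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                caught[group] += 1
    return {g: caught[g] / positives[g] for g in positives}


def flag_disparity(rates, tolerance=0.05):
    """Flag groups whose sensitivity trails the best-served group by more than tolerance."""
    best = max(rates.values())
    return sorted(g for g, r in rates.items() if best - r > tolerance)


# Hypothetical audit data: (group, actual diagnosis, model prediction).
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0),
    ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0), ("group_b", 1, 0),
    ("group_a", 0, 0), ("group_b", 0, 0),
]
rates = sensitivity_by_group(records)
print(rates)                  # {'group_a': 0.75, 'group_b': 0.25}
print(flag_disparity(rates))  # ['group_b']
```

Running the same check on every new batch of cases, rather than once before launch, is what turns it into the ongoing audit described above.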

The Accountability Puzzle

When an AI system contributes to a medical error, who’s on the hook? This question of accountability is perhaps the trickiest ethical puzzle of all. Is it the software developer who wrote the algorithm? The hospital that bought and implemented the tool? Or the clinician who ultimately trusted the AI's recommendation?

The answer is seldom simple. Medical decisions that involve AI are a partnership between human and machine. The AI offers an analysis or a suggestion, but a human doctor makes the final call. This shared responsibility can make it incredibly difficult to pinpoint accountability when something goes wrong.

Establishing clear lines of responsibility is absolutely vital for patient safety and for building lasting trust in AI tools. Without a clear framework, doctors might be hesitant to adopt powerful new technologies, and patients could be left with no clear path to justice if an error occurs. Figuring out who is answerable is a critical piece of responsibly integrating AI in healthcare.

Protecting Patient Privacy in the Age of AI

The engine that drives any meaningful AI in healthcare runs on a single, precious fuel: patient data. Every algorithm learns and improves by analyzing vast datasets, which creates a delicate balancing act. On one side is the incredible potential for medical breakthroughs; on the other is every individual's fundamental right to privacy.

This tension is the central ethical challenge we face. The goal is to unlock the collective power of health information without ever compromising the confidentiality of a single person. To manage this, healthcare organizations have long relied on anonymization, the process of stripping out personally identifiable details like names, addresses, and health card numbers.

But anonymization isn't the perfect shield it once was.

The Limits of Anonymization

In a world saturated with data, the risk of re-identification is very real. Even after direct identifiers are removed, it’s sometimes possible to connect the dots and figure out who a person is. By cross-referencing "anonymous" medical data with other publicly available information, like social media or census records, a person's identity can be reconstructed. True, foolproof anonymity is becoming harder and harder to guarantee.
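To see how cross-referencing works, consider a toy linkage. A de-identified record often still carries quasi-identifiers such as a postal-code prefix, birth year, and sex, and any public dataset that shares those fields can be joined on them. All records below are invented for illustration; the point is that a combination of fields matching exactly one person re-identifies them.

```python
# Hypothetical "anonymized" clinical extract: names removed, quasi-identifiers kept.
clinical = [
    {"postal_prefix": "K1A", "birth_year": 1984, "sex": "F", "diagnosis": "diabetes"},
    {"postal_prefix": "M5V", "birth_year": 1990, "sex": "M", "diagnosis": "asthma"},
    {"postal_prefix": "M5V", "birth_year": 1990, "sex": "F", "diagnosis": "migraine"},
]

# Hypothetical public data (e.g., scraped social media profiles).
public = [
    {"name": "Alice Example", "postal_prefix": "K1A", "birth_year": 1984, "sex": "F"},
    {"name": "Bob Example", "postal_prefix": "M5V", "birth_year": 1990, "sex": "M"},
    {"name": "Carol Example", "postal_prefix": "M5V", "birth_year": 1990, "sex": "M"},
]

QUASI_IDS = ("postal_prefix", "birth_year", "sex")


def link(clinical_rows, public_rows):
    """Return (name, diagnosis) pairs where exactly one public person matches."""
    matches = []
    for row in clinical_rows:
        key = tuple(row[f] for f in QUASI_IDS)
        candidates = [p["name"] for p in public_rows
                      if tuple(p[f] for f in QUASI_IDS) == key]
        if len(candidates) == 1:  # a unique match means the record is re-identified
            matches.append((candidates[0], row["diagnosis"]))
    return matches


print(link(clinical, public))  # [('Alice Example', 'diabetes')]
```

The second record survives only because two people in the public data share its quasi-identifiers; with richer public data, unique combinations become far more common.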

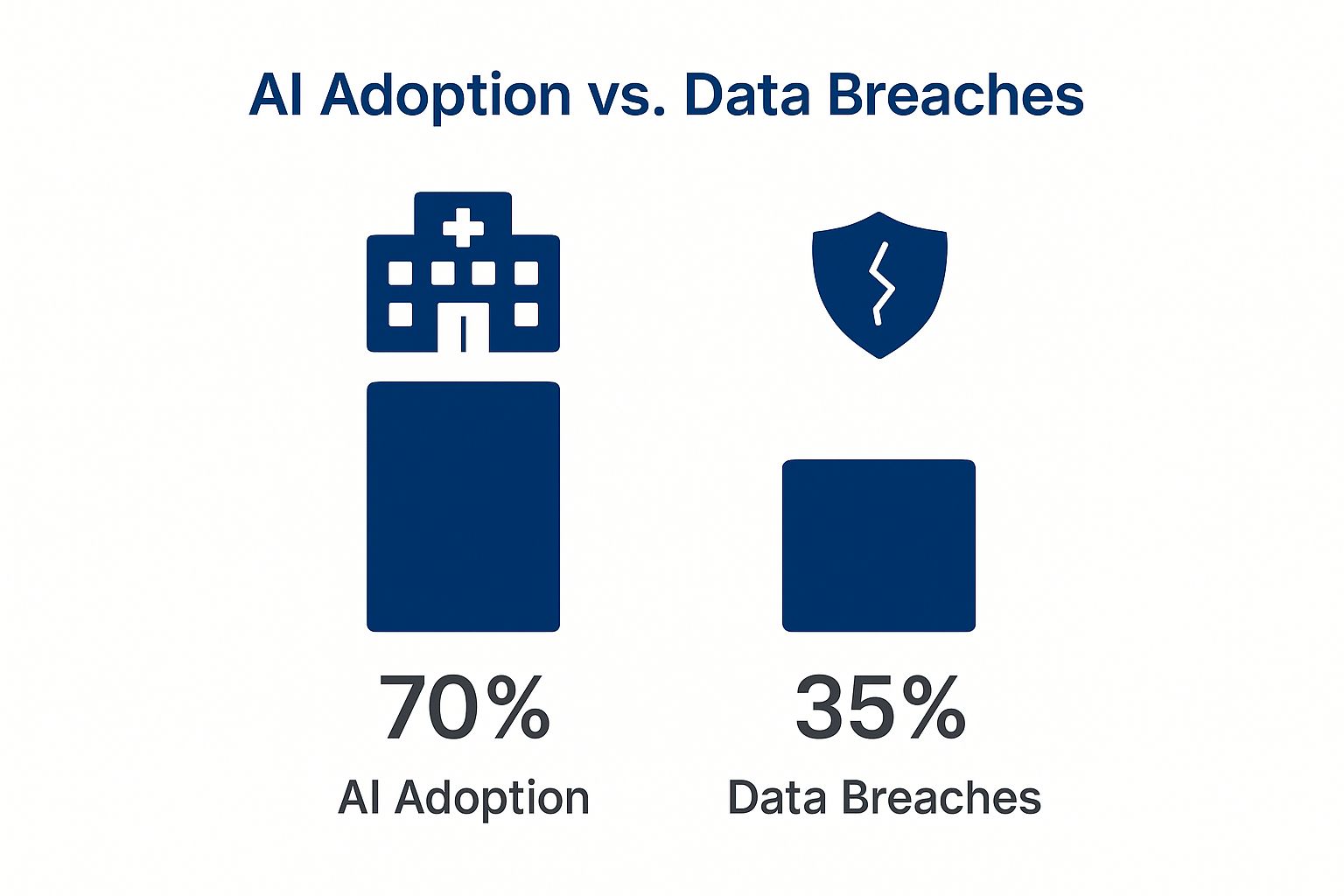

The numbers tell a compelling story. While AI adoption is surging in healthcare, data security is struggling to keep pace, creating a significant risk.

This image paints a clear picture: as 70% of healthcare providers embrace AI, a startling 35% of those systems have already faced a data breach. This gap shows that while we're eager to innovate, we need to double down on protecting the very data that makes it possible. Building more secure systems from the ground up is essential, and leaning on established software security best practices is a great place to start.

Protecting patient data isn't just a technical challenge; it's a strategic necessity. Several methods have emerged to help organizations train effective AI models while keeping sensitive information secure.

Data Protection Strategies in Healthcare AI

| Protection Method | How It Works | Key Benefit | Primary Limitation |
|---|---|---|---|
| Anonymization | Removes direct identifiers (name, address, etc.) from datasets. | Simple to implement and widely understood. | High risk of re-identification when combined with other data sources. |
| Pseudonymization | Replaces direct identifiers with artificial ones, or “pseudonyms.” A key is kept separately to re-link records if needed. | Allows data tracking and longitudinal studies without exposing direct personal information. | The re-identification key is a single point of failure; if it is compromised, privacy is lost. |
| Differential Privacy | Adds calibrated mathematical “noise” to query results or model training, so the output reveals almost nothing about whether any single individual’s data was included. | Provides a strong, mathematically provable privacy guarantee. | The added noise can reduce the accuracy or utility of the AI model. |
| Federated Learning | Trains the AI model where the data lives (e.g., on a hospital’s own servers), so the raw data never leaves the site. | Data stays private at its source, greatly reducing breach risk. | Complex to set up and requires significant computational resources at each location. |
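The pseudonymization row of the table can be sketched in a few lines: each health card number is swapped for a random token, and the token mapping (the "re-identification key") is kept apart from the research data. This is an illustrative sketch that assumes an in-memory dictionary as the key store; in practice that mapping would live in a separately secured system, since anyone holding it can reverse the process.

```python
import secrets


class Pseudonymizer:
    """Swap direct identifiers for random tokens; the token maps are the re-link key."""

    def __init__(self):
        self._key = {}     # token -> real identifier (sensitive: store separately)
        self._tokens = {}  # real identifier -> token (also part of the key)

    def pseudonymize(self, identifier: str) -> str:
        if identifier not in self._tokens:
            token = "P-" + secrets.token_hex(8)  # random, so not derivable from the ID
            self._tokens[identifier] = token
            self._key[token] = identifier
        return self._tokens[identifier]

    def relink(self, token: str) -> str:
        """Reverse the mapping; only whoever holds the key can do this."""
        return self._key[token]


# Hypothetical lab results keyed by a made-up health card number.
p = Pseudonymizer()
visits = [("1234-567-890", "HbA1c 7.2%"), ("1234-567-890", "HbA1c 6.8%")]
research_rows = [(p.pseudonymize(card), result) for card, result in visits]

# Both visits share one pseudonym, so longitudinal analysis still works.
print(research_rows[0][0] == research_rows[1][0])  # True
```

Because the tokens are random rather than derived from the identifier, the research rows alone reveal nothing; the risk is concentrated entirely in the key store, which is exactly the single point of failure the table notes.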

Each of these strategies offers a different trade-off between data utility and privacy. The right approach often involves a combination of these methods, tailored to the specific use case and the sensitivity of the data involved.
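The utility-versus-privacy trade-off is easiest to see with the classic Laplace mechanism for a counting query (for example, "how many patients in this cohort have condition X?"). Adding or removing one patient changes a count by at most 1, so adding Laplace noise with scale 1/ε satisfies ε-differential privacy; a smaller ε means stronger privacy and noisier answers. This sketch uses only the standard library and an invented count. Production systems should use a vetted differential privacy library, since hand-rolled floating-point noise from a non-cryptographic generator has known weaknesses.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw from Laplace(0, scale) as the difference of two exponential samples."""
    rate = 1.0 / scale  # expovariate takes a rate; its mean is 1 / rate = scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy (a count's sensitivity is 1)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(scale=1.0 / epsilon, rng=rng)


rng = random.Random(42)  # fixed seed only so the demo is repeatable
true_count = 130         # hypothetical number of patients with a given condition
for eps in (1.0, 0.1):
    answers = [private_count(true_count, eps, rng) for _ in range(1000)]
    avg_error = sum(abs(a - true_count) for a in answers) / len(answers)
    print(f"epsilon={eps}: average absolute error ~ {avg_error:.1f}")
```

The expected absolute error of Laplace noise equals its scale, so the printed errors should land near 1 for ε = 1 and near 10 for ε = 0.1: ten times more privacy costs roughly ten times more error.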

Navigating Canadian Privacy Laws

Here in Canada, the federal Personal Information Protection and Electronic Documents Act (PIPEDA) lays down the rules for how private-sector organizations must handle personal information, and provincial health privacy laws, such as Ontario's Personal Health Information Protection Act (PHIPA), add rules specific to health data. These laws are technology-neutral: they apply to the AI systems used in healthcare just as they do to traditional records, so patient data may be collected, used, and shared only with proper consent and strong safeguards.

Ethical data stewardship is non-negotiable. Without it, public trust evaporates, and the entire foundation of AI in healthcare could crumble. Patients must feel confident that their most sensitive information is being handled with absolute care.

This brings us to another tricky ethical puzzle: informed consent.

The Challenge of Informed Consent

Traditionally, "informed consent" meant a patient understood exactly how their information would be used for a specific purpose. AI throws a wrench in that. An algorithm might discover completely new and unforeseen uses for a patient's data years after it was collected.

So, how can someone give truly "informed" consent for applications that don't even exist yet?

To tackle this, the healthcare community is exploring more dynamic and transparent consent models. The idea is to give patients ongoing control over their data and provide clear, continuous information about how it’s contributing to medical science. Finding that perfect balance is key to ensuring that AI in healthcare moves forward in a way that is both incredibly effective and deeply respectful of every individual.
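One way to picture a dynamic consent model is as a per-purpose registry that patients can update at any time and that is checked before each new use of their data. The class and purpose names below are hypothetical, and real consent systems also handle identity verification, audit requirements, and legal obligations that this sketch ignores.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentRecord:
    """Tracks which purposes a patient has agreed to, and when each choice changed."""
    patient_id: str
    purposes: dict = field(default_factory=dict)  # purpose -> True/False
    history: list = field(default_factory=list)   # (timestamp, purpose, granted)

    def set_consent(self, purpose: str, granted: bool) -> None:
        self.purposes[purpose] = granted
        self.history.append((datetime.now(timezone.utc), purpose, granted))

    def allows(self, purpose: str) -> bool:
        # Default to "no": a new, unforeseen purpose needs fresh consent.
        return self.purposes.get(purpose, False)


consent = ConsentRecord("patient-001")
consent.set_consent("diabetes_model_training", True)
print(consent.allows("diabetes_model_training"))  # True
print(consent.allows("new_cardiology_study"))     # False: never asked
consent.set_consent("diabetes_model_training", False)  # patient changes their mind
print(consent.allows("diabetes_model_training"))  # False
```

Defaulting unknown purposes to "no" is the design choice that addresses the question above: an application that did not exist at collection time triggers a new request instead of relying on old consent.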

Unmasking and Correcting Algorithmic Bias

While the promise of AI in healthcare is immense, we have to talk about a serious risk hiding in the code: algorithmic bias. This isn't just a simple technical glitch. It's a complex problem that could actually make existing health disparities worse, completely undermining the goal of fair and equal care.

So, what is it? At its core, algorithmic bias is when an AI system's results consistently and unfairly favour one group of people over another. This usually happens because the AI learned from data that already contained historical and societal biases. The algorithm doesn't create prejudice out of thin air; it learns, and can even amplify, the biases we feed it.

Think about a diagnostic tool designed to spot skin conditions. If it was trained mostly on images of lighter skin, that tool will almost certainly struggle to identify diseases on darker skin. This isn't a hypothetical problem; it's a documented reality that can lead to missed diagnoses and delayed treatment for entire communities. It’s a perfect example of how good intentions can go wrong if we aren’t careful.

Identifying the Roots of Bias

Algorithmic bias can sneak into healthcare AI from several directions, and it’s rarely intentional. Figuring out where it comes from is the first, most critical step to fixing it.

Here are the usual suspects:

Unrepresentative Training Data: If the data used to train an AI model doesn't accurately reflect the diversity of the real world, whether by race, gender, income, or location, the AI will naturally perform poorly for the underrepresented groups.

Historical Inequities: Medical data is often a mirror of past societal problems. For instance, an AI designed to allocate healthcare resources might inadvertently penalize historically underserved neighbourhoods if it's trained on old data reflecting those very inequities.

Lack of Diversity in Development Teams: When the people building the technology come from similar backgrounds, they can have blind spots. They might not even think to ask how an AI tool could affect communities different from their own.
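Unrepresentative training data can often be caught before a model is trained by comparing each group's share of the dataset with its share of the population the tool will serve. The group labels, reference shares, and the 0.8 cut-off below are illustrative assumptions, not recommended values.

```python
from collections import Counter


def representation_ratios(dataset_groups, reference_shares):
    """Ratio of each group's share in the data to its share in the reference population."""
    counts = Counter(dataset_groups)
    total = sum(counts.values())
    return {g: (counts.get(g, 0) / total) / share for g, share in reference_shares.items()}


def underrepresented(ratios, threshold=0.8):
    """Groups whose data share falls below threshold x their population share."""
    return sorted(g for g, r in ratios.items() if r < threshold)


# Hypothetical training-set labels and population shares (shares must be > 0).
training_groups = ["urban"] * 850 + ["rural"] * 120 + ["remote"] * 30
population = {"urban": 0.70, "rural": 0.20, "remote": 0.10}

ratios = representation_ratios(training_groups, population)
print({g: round(r, 2) for g, r in ratios.items()})  # {'urban': 1.21, 'rural': 0.6, 'remote': 0.3}
print(underrepresented(ratios))                     # ['remote', 'rural']
```

A ratio well below 1 does not prove the model will be biased, but it points to where performance should be checked most carefully and where more data is needed.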

These issues can stack up, creating AI systems that, despite being built to help, end up making inequality worse.

Bias in healthcare AI is not merely an inconvenience; it is a critical safety issue. An algorithm that makes systematically worse recommendations for one group of people is not just unfair; it is dangerous and undermines trust in medical technology.

Proactive Solutions for Fairer AI

The good news is that the healthcare community is well aware of the problem and is actively working on solutions. The focus is shifting from just pointing out bias to building fairness directly into the DNA of medical AI from the very beginning.

This means taking a multi-pronged approach built on diligence and inclusivity. Here in Canada, we're seeing real momentum: by 2025, some AI-assisted diagnostic tools had reached accuracy rates above 90% in medical imaging. This progress is supported by a CAD 443 million investment through the Pan-Canadian AI Strategy, which covers not only business and talent but also the creation of standards for responsible AI. You can read more about these Canadian breakthroughs on canadiansme.ca.

The goal is to build a future where accuracy and equity go hand-in-hand. Key strategies to get there include:

Diversifying Datasets: We need to actively find and include high-quality data from a wide range of populations. It's the only way to train models that truly work for everyone.

Conducting Algorithmic Audits: Think of this as regular, rigorous stress-testing. By constantly checking AI systems for biased outcomes, we can catch and fix problems before they do any harm in a real clinic.

Building Inclusive Teams: Assembling development teams with diverse backgrounds, experiences, and perspectives helps spot potential biases long before a tool is ever deployed.

By putting these solutions into practice, we can move toward AI systems that don't just advance medicine but also help create a fairer, more equitable healthcare future for every single person.

Putting Ethical AI into Practice

It’s one thing to talk about ethical principles, but it’s another to see them working in the real world. The good news is that ethical AI in healthcare isn't just a lofty goal; it’s already happening in Canadian clinics. Across the country, we’re seeing fantastic examples of how innovation and responsibility can go hand-in-hand to improve life for both patients and clinicians. These aren't just hypotheticals; they prove we can adopt powerful new tools without sacrificing our core values.

One of the most compelling stories comes from the front lines of medicine, tackling a problem that has plagued the industry for years: physician burnout. The culprit? An overwhelming mountain of administrative work.

Easing the Burden with AI Scribes

Imagine a doctor’s visit where the physician can give you their undivided attention, without once turning to a computer. That's the promise of ambient AI scribe technology. This tool acts like a silent, intelligent assistant in the room, listening to the natural conversation between doctor and patient and automatically generating the clinical notes.

The impact has been massive, especially in Ontario, where the province is leading the charge in bringing this tech to primary care. An evaluation of over 150 clinicians revealed that these AI scribes can cut documentation time by an astonishing 70-90%. It’s no surprise that 83% of the doctors involved wanted to keep using the software.

As of early 2025, more than 10% of Ontario's primary care doctors are already on board, with a goal to get that number to 50% by the spring of 2026. If you want to dive deeper into this initiative, the C.D. Howe Institute report on AI in healthcare has all the details.

This technology is a perfect example of ethical AI in action. It’s designed with strong privacy safeguards from the ground up to protect sensitive patient information. Of course, integrating these tools effectively requires careful planning, which is a crucial part of developing custom medical software.

Keeping a Human in the Loop

Another powerful example of responsible AI comes from radiology. Today's AI algorithms are remarkably skilled at analyzing medical images like X-rays and CT scans, often spotting subtle signs of disease that the human eye might miss. But what about the risk of an error? Even a tiny mistake could have huge consequences, which is why we can't leave the final call to a machine.

This is where the "human-in-the-loop" model is essential.

Think of the AI as a highly skilled assistant. It scans the image, highlights potential areas of concern, and offers a preliminary analysis. The final diagnosis, however, is always made by a qualified human radiologist, who uses the AI's input to augment their own expertise.

This collaborative approach is a win-win, delivering several key benefits:

Improved Accuracy: You get the best of both worlds, combining the incredible pattern-recognition power of AI with the nuanced clinical judgment of an experienced doctor.

Enhanced Safety: It provides a critical backstop, ensuring a human expert verifies every AI-generated finding before it influences a patient's treatment.

Clear Accountability: Responsibility is much easier to trace, because the human clinician is, and always remains, accountable for the final decision.
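In software, the human-in-the-loop model often comes down to one rule: an AI finding stays a draft until a named clinician reviews it, and only reviewed findings can reach the final report. The sketch below encodes that rule; the class names, statuses, and the radiologist's name are hypothetical and not taken from any specific radiology system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Finding:
    description: str
    ai_confidence: float
    status: str = "pending_review"  # AI output starts as a suggestion only
    reviewed_by: Optional[str] = None

    def review(self, radiologist: str, accept: bool) -> None:
        """Record the human decision; the named reviewer is accountable for it."""
        self.status = "confirmed" if accept else "rejected"
        self.reviewed_by = radiologist


def final_report(findings):
    """Refuse to finalize while any AI finding is still unreviewed."""
    pending = [f for f in findings if f.status == "pending_review"]
    if pending:
        raise RuntimeError(f"{len(pending)} finding(s) still need radiologist review")
    return [(f.description, f.reviewed_by) for f in findings if f.status == "confirmed"]


# Hypothetical AI findings on one chest X-ray, reviewed by a hypothetical radiologist.
findings = [Finding("nodule, right upper lobe", 0.91), Finding("possible rib fracture", 0.55)]
findings[0].review("Dr. Example", accept=True)
findings[1].review("Dr. Example", accept=False)
print(final_report(findings))  # [('nodule, right upper lobe', 'Dr. Example')]
```

Recording who reviewed each finding also supports the accountability point: every item in the final report carries the name of the clinician who approved it.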

From AI scribes that give doctors their time back to human-in-the-loop systems that make diagnoses safer, these real-world applications show that ethical AI in healthcare is not some far-off dream. It's here, and it’s proving that we can innovate without compromising our commitment to patient care.

Building a Future of Responsible AI in Healthcare

Getting AI in healthcare right isn't a race to a finish line. It’s more like a constant balancing act, where we have to weigh incredible progress against fundamental principles. The big ethical hurdles, like privacy, bias, and accountability, demand our constant attention.

To move forward responsibly, we need a coordinated effort from everyone involved. This is about making sure the technology always serves people first. It means building on a few core strategies that aren't just nice-to-haves; they're the essential building blocks for an AI-powered healthcare system that Canadians can actually trust.

Core Strategies for Success

If we want AI to improve care safely and for everyone, there are four areas we absolutely have to get right.

Strong Regulatory Frameworks: We need clear, flexible rules from policymakers that protect patients without killing innovation. These regulations must set firm boundaries for data privacy, spell out who is accountable when things go wrong, and demand transparency in how AI tools are used to make clinical decisions.

Transparent Algorithms: One of the biggest roadblocks to trust is the "black box" problem, when nobody, not even the developers, can fully explain why an AI made a certain decision. We have to champion explainable AI (XAI) so that doctors can understand the why behind a recommendation. Only then can they use these tools with real confidence.

Vigilant Bias Monitoring: Fixing bias in an algorithm isn't a one-and-done job. Healthcare organizations need to commit to ongoing audits of their AI systems. This is the only way to catch and correct biases as they appear, ensuring the system delivers fair outcomes for all of our diverse communities.

Unwavering Patient-Centred Care: At the end of the day, every single AI application has to be measured by one simple standard: does it make things better for the patient? Technology must always be a tool that supports the vital human connection between patients and their caregivers, never something that replaces it.
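Explainability techniques range from simple to very involved. In the simplest case, a linear risk score, each feature's contribution is just its weight times its value, which lets a clinician see exactly what drove a particular score. The features, weights, and bias below are invented for illustration; complex models such as deep neural networks need dedicated XAI methods that this sketch does not cover.

```python
def explain_linear_score(weights, bias, patient):
    """Return the score and per-feature contributions (weight * value), largest first."""
    contributions = {name: weights[name] * patient[name] for name in weights}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical risk model and patient (features already scaled).
weights = {"age_decades": 0.4, "systolic_bp_z": 0.9, "smoker": 1.2, "ldl_z": 0.3}
patient = {"age_decades": 6.5, "systolic_bp_z": 1.0, "smoker": 0, "ldl_z": -0.5}

score, ranked = explain_linear_score(weights, bias=-3.0, patient=patient)
print(f"risk score: {score:.2f}")
for name, value in ranked:
    print(f"  {name:>14}: {value:+.2f}")
```

Here the breakdown shows that age dominates this patient's score, while smoking status contributes nothing, which is the kind of "why" a doctor can check against the chart.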

Shaping a future where AI and ethics coexist requires a shared conversation. It is a collective responsibility that falls on the shoulders of policymakers, developers, clinicians, and patients alike. Every voice is needed to guide this technology toward its most promising potential.

This is a call to action for everyone with a stake in the future of Canadian healthcare. By taking an active part in this discussion, we can ensure that AI is built not just for the sake of innovation, but with a deep, unwavering commitment to keeping people safe, treating them equitably, and upholding our ethical responsibilities.

Answering Your Questions About AI in Healthcare

As AI tools become more common in clinics and hospitals, it’s completely normal to have questions. This technology is incredibly exciting, but it also raises important points about privacy, safety, and what it means for the future of medicine.

Let's break down some of the most common questions people have about artificial intelligence and its growing role in our healthcare.

Is My Health Information Safe with AI?

This is, without a doubt, the top priority. Protecting patient privacy is non-negotiable. While no system is ever 100% foolproof, AI in healthcare operates under strict privacy laws, such as the federal PIPEDA and provincial health privacy statutes, which legally require organizations to keep personal health information secure.

To keep data safe, healthcare organizations layer their defences. It's not just one lock on the door.

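Data Anonymization: This involves stripping personal details like your name or address from your health data so it is much harder to trace back to you. Because re-identification is still possible, anonymization is used alongside other safeguards rather than on its own.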

Federated Learning: Think of this as training the AI model right inside the hospital's own secure system. The raw data never has to leave the premises, which dramatically cuts down on the risk of it being exposed.

Strict Access Controls: Only a handful of authorized people can access sensitive data, and everything they do is logged and tracked.
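The federated learning idea in the list above is commonly implemented with federated averaging (FedAvg): each site trains on its own data and sends back only model parameters, which a coordinator averages, weighted by how many examples each site holds. The sketch below fits a one-variable linear model with plain gradient descent; the three "hospitals" and their data are synthetic, and real deployments add protections such as secure aggregation because shared model updates can still leak information.

```python
def local_update(w, b, data, lr=0.05, epochs=50):
    """Train y ~ w*x + b on one site's data; the raw data never leaves this function."""
    n = len(data)
    for _ in range(epochs):
        grad_w = 2 / n * sum((w * x + b - y) * x for x, y in data)
        grad_b = 2 / n * sum((w * x + b - y) for x, y in data)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def federated_round(w, b, sites):
    """One FedAvg round: sites train locally, coordinator averages by sample count."""
    updates = [(local_update(w, b, data), len(data)) for data in sites]
    total = sum(n for _, n in updates)
    new_w = sum(wi * n for (wi, _), n in updates) / total
    new_b = sum(bi * n for (_, bi), n in updates) / total
    return new_w, new_b


# Synthetic data at three hypothetical hospitals, all following y = 2x + 1.
sites = [
    [(x, 2 * x + 1) for x in (-1.0, 0.0, 1.0)],
    [(x, 2 * x + 1) for x in (-2.0, 2.0)],
    [(x, 2 * x + 1) for x in (-1.5, -0.5, 0.5, 1.5)],
]

w, b = 0.0, 0.0
for _ in range(20):
    w, b = federated_round(w, b, sites)
print(f"learned w={w:.2f}, b={b:.2f}")  # learned w=2.00, b=1.00
```

Only the pairs (w, b) cross site boundaries; the coordinator never sees a single patient record, yet the shared model recovers the pattern present across all three sites.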

Will AI Replace Doctors and Nurses?

This is a big one, but the short answer is no. The goal isn't replacement; it's teamwork. AI is best thought of as a highly skilled partner for medical professionals. It takes on the time-consuming, repetitive work, which frees up doctors and nurses to focus on what humans do best: thinking critically, connecting with patients, and making complex judgment calls.

A good example is in radiology. An AI can sift through thousands of medical scans in a fraction of the time it would take a person, flagging anything that looks suspicious. But the final call? That always comes from a human radiologist who can apply their years of experience and understand the full context of the patient's health. AI does the heavy lifting, but the human expert makes the crucial decision.

How Accurate Are AI Diagnostic Tools?

In certain specific tasks, AI's accuracy can be stunningly high, sometimes even better than a human. For instance, some models have hit accuracy rates of over 90% when it comes to identifying specific types of cancer from images.

But that accuracy comes with a big "if." An AI is only as good as the data it was trained on. If that data is biased or doesn't represent a diverse population, the tool simply won't perform well in the real world.

That’s why the "human-in-the-loop" model is so essential. A trained medical professional must always be there to review and validate what the AI suggests. This creates a powerful safety net where you get the best of both worlds: the precision of technology and the wisdom of human expertise.

What Happens If an AI Makes a Mistake?

Figuring out who's responsible when an AI is involved in a medical error is one of the toughest ethical puzzles we're facing right now. It’s rarely a simple case of pointing the finger at one person or thing.

The legal and ethical rules are still catching up to the technology, but responsibility could be shared among several parties:

The developer who designed and built the AI algorithm.

The hospital or clinic that chose to use the tool.

The doctor or nurse who ultimately acted on the AI's output.

Creating clear, fair guidelines for accountability is a major focus for regulators. It's absolutely critical for ensuring patient safety and building lasting trust in these powerful new tools.

At Cleffex Digital Ltd, we focus on building secure and compliant AI-driven solutions that solve real-world problems in healthcare. We have deep experience in innovative technology and agile practices, helping clinics, hospitals, and life sciences startups confidently step into the future of medicine. Find out how we can help you tackle your biggest business challenges by visiting us at https://www.cleffex.com.