When we talk about artificial intelligence in healthcare data security, we're not just talking about another piece of software. We're talking about using smart, learning algorithms to get ahead of threats, automate the painstaking work of compliance, and build a fortress around sensitive patient information. It’s a fundamental shift from playing defence to building a predictive, intelligent security strategy that modern healthcare desperately needs.

The New Frontline in Protecting Patient Data

As Canadian healthcare organisations bring AI into the fold to improve patient outcomes, they’re also unintentionally opening new doors to sophisticated cyber threats. This creates a tricky situation: how do you embrace these powerful new tools while still protecting data that often lives on ageing IT infrastructure? It’s a bit like installing a high-tech digital lock on an old, weathered wooden door – the lock itself is strong, but the frame is still a vulnerability.

This paradox blows the digital attack surface wide open. Every new AI-powered tool, whether it’s a diagnostic algorithm or an administrative chatbot, creates a new potential entry point for attackers to exploit. This doesn't just increase the odds of a data breach; it makes navigating the complex web of regulations a much bigger headache.

The Dual Role of AI in Data Security

There's no denying that AI is being adopted at a breakneck pace. In a 2025 survey, a staggering 87% of Canadian IT decision-makers in healthcare confirmed they were already using AI for patient care. Yet, this progress comes with a catch. The same survey found that 53% of respondents now see security as their number one IT concern, a fear amplified by the fact that 99% of organisations are still running on legacy systems that introduce security risks.

This is where you can see the two faces of AI. If rolled out carelessly, it can introduce new weaknesses. But when implemented correctly, AI is also our most powerful weapon against modern cyber threats, offering capabilities that traditional security tools just can’t replicate. A robust defence for patient data requires not only smart AI solutions but also a solid foundation built on advanced cybersecurity frameworks.

AI isn’t just another tool in the security arsenal; it's the intelligent watchtower that can see threats forming on the horizon, long before they reach the castle walls. It provides the foresight needed to protect patient data in a constantly shifting threat environment.

A Roadmap to Intelligent Protection

Knowing how to properly bring AI into your security strategy is everything. This guide is meant to be a clear roadmap for healthcare and enterprise buyers, walking you through the entire journey from basic concepts to hands-on implementation.

We'll break down:

- Intelligent Threat Detection: See how AI algorithms can spot and neutralise threats in real-time, moving at a speed no human team could ever match.
- Automated Compliance: Understand AI's role in making adherence to regulations like PIPEDA and HIPAA smoother, cutting down on manual work and the potential for human error.
- Practical Implementation: Get a step-by-step approach for weaving AI security measures into the infrastructure you already have. For more on this topic, you can read about the importance of cybersecurity in the healthcare industry.

By working through this guide, your organisation can move forward with AI confidently, turning what could be a vulnerability into your most powerful security asset.

How AI Detects and Prevents Cyber Threats

To get a real sense of how AI works in healthcare data security, picture a hospital’s digital network as a bustling city. Your traditional security tools, such as firewalls and antivirus software, are like security guards posted at the main gates. They're pretty good at checking IDs against a list of known troublemakers, but they can’t catch someone with a clever disguise or a thief who slips in through an unguarded back alley. This is where AI completely changes the game.

AI is less like a guard and more like an intelligent surveillance system that learns the entire city's rhythm. It doesn't just scan for known bad guys; it builds a deep understanding of what normal, everyday activity looks like. It learns which doctors typically access certain patient files, what time of day data is usually transferred, and from which specific devices.

This process is called anomaly detection, and it's the heart of any AI-powered defence. By sifting through millions of data points in real time, the system can instantly flag activity that deviates significantly from that established baseline.
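To make the idea of a baseline concrete, here is a minimal sketch in Python, assuming the only signal is how many patient records a user opens per day. The function name `is_anomalous` and the three-standard-deviation cut-off are illustrative choices, not a standard; commercial tools model many more signals at once.

```python
from statistics import mean, stdev


def is_anomalous(history: list[int], today: int, threshold: float = 3.0) -> bool:
    """Flag today's record-access count if it sits more than `threshold`
    standard deviations above this user's historical mean (a z-score test)."""
    if len(history) < 2:
        return False  # too little history to establish a baseline yet
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        return today > baseline  # perfectly steady history: any rise is unusual
    z_score = (today - baseline) / spread
    return z_score > threshold


# Hypothetical daily record counts for one physician over the past week
week = [38, 42, 40, 45, 39, 41, 43]
print(is_anomalous(week, 44))    # False: an ordinary busy day
print(is_anomalous(week, 2000))  # True: a sudden bulk access
```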

Unmasking Threats With Behavioural Analysis

When an AI security system spots an anomaly, it doesn't just stop there; it digs deeper. For instance, imagine a doctor’s login credentials are suddenly used to access thousands of patient records at 3 a.m. from an unrecognised location. The AI immediately identifies this as suspicious behaviour. It’s not about a single rule being broken; it’s the whole context and pattern of the action that raises a red flag.
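The point about context can be shown with a simple weighted score. In this sketch (Python, with hypothetical signal names and hand-picked weights standing in for what a real model would learn), no single signal is enough to cross the alert threshold, but the combination described above is.

```python
from dataclasses import dataclass

# Hypothetical weights; a production system would learn these from data.
WEIGHTS = {"off_hours": 0.3, "new_location": 0.3, "bulk_access": 0.4}
ALERT_THRESHOLD = 0.7


@dataclass
class AccessEvent:
    hour: int               # local hour of day, 0-23
    location_known: bool    # has this user signed in from here before?
    records_accessed: int   # records opened in this session


def risk_score(event: AccessEvent, typical_records: int) -> float:
    """Add up the weights of every contextual signal this event triggers."""
    score = 0.0
    if event.hour < 6 or event.hour >= 22:
        score += WEIGHTS["off_hours"]
    if not event.location_known:
        score += WEIGHTS["new_location"]
    if event.records_accessed > 10 * typical_records:
        score += WEIGHTS["bulk_access"]
    return score


night_shift = AccessEvent(hour=3, location_known=True, records_accessed=35)
suspicious = AccessEvent(hour=3, location_known=False, records_accessed=4000)
print(risk_score(night_shift, typical_records=40) >= ALERT_THRESHOLD)  # False
print(risk_score(suspicious, typical_records=40) >= ALERT_THRESHOLD)   # True
```

A legitimate night-shift login trips only one signal, so it stays below the threshold; the 3 a.m. bulk access from an unknown location trips all three.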

This is a world away from traditional systems, which often only react after malware has been deployed or data has already been stolen. AI’s proactive approach allows it to step in before any real damage is done, neutralising threats that older security models would have missed entirely.

These AI systems are particularly good at stopping modern threats, such as:

- Zero-Day Exploits: These attacks target brand-new software vulnerabilities before developers can even create a patch. Because there's no known signature, traditional antivirus is blind to them. AI, however, can spot the strange behaviour they cause.
- Advanced Persistent Threats (APTs): These are stealthy, long-term attacks where hackers slowly and quietly infiltrate a network. An AI can detect the subtle, "low-and-slow" activities linked to APTs that might otherwise go unnoticed for months.
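One classic way to catch "low-and-slow" activity is a cumulative sum (CUSUM) control chart, which accumulates small, persistent excesses over a baseline until they add up to something significant. The sketch below applies a one-sided CUSUM to hypothetical daily outbound data volumes for a single workstation; the baseline, slack and threshold values are assumptions chosen for illustration, and AI-based tools typically learn such parameters rather than hard-coding them.

```python
from typing import Optional


def cusum_alarm(values: list[float], baseline: float, slack: float,
                threshold: float) -> Optional[int]:
    """One-sided CUSUM: return the index of the first observation at which
    the accumulated excess over (baseline + slack) exceeds `threshold`."""
    running = 0.0
    for i, value in enumerate(values):
        # Days below the slack band pull the running sum back toward zero
        running = max(0.0, running + (value - baseline - slack))
        if running > threshold:
            return i
    return None


# Hypothetical outbound MB per day: normal noise, then a steady +15 MB leak
daily_mb = [98, 103, 101, 97, 115, 116, 114, 115, 117]
print(cusum_alarm(daily_mb, baseline=100, slack=5, threshold=50))  # 8
```

No single day here is more than 17% above normal, yet the running total raises the alarm on the ninth day.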

As we've explored before, using artificial intelligence for threat detection is about creating a defence that learns and adapts on its own. It continuously refines its understanding of what a threat looks like, evolving right alongside the attackers' tactics.

From Detection to Automated Response

Spotting a threat is only half the battle. The real strength of AI in healthcare data security is its ability to respond instantly and automatically. When a high-risk anomaly is detected, the AI can take immediate action without waiting for a human to intervene, and in a cyberattack, every second counts.

For example, an AI system could instantly:

- Isolate an Endpoint: Automatically quarantine a compromised device from the rest of the network to stop malware from spreading.
- Block User Access: Temporarily revoke credentials that are behaving erratically to stop a potential data breach in its tracks.
- Flag for Review: Alert the human security team with a detailed incident report, providing crucial context and recommending next steps.
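A response playbook like this can be expressed as a small mapping from risk to actions. This Python sketch is hypothetical: the thresholds are invented, and in practice each action would call your endpoint-protection or identity platform, which is not shown. Every automated action here also flags the incident for human review.

```python
from enum import Enum


class Action(Enum):
    FLAG_FOR_REVIEW = "flag for review"
    BLOCK_USER = "block user access"
    ISOLATE_ENDPOINT = "isolate endpoint"


def plan_response(risk: float, malware_suspected: bool) -> list[Action]:
    """Choose automated first-response actions for a scored incident."""
    actions: list[Action] = []
    if risk >= 0.5:
        actions.append(Action.FLAG_FOR_REVIEW)   # humans always see it
    if risk >= 0.8:
        actions.append(Action.BLOCK_USER)        # suspend the credentials
    if malware_suspected and risk >= 0.5:
        actions.append(Action.ISOLATE_ENDPOINT)  # quarantine the device
    return actions


print([a.value for a in plan_response(0.9, malware_suspected=True)])
# ['flag for review', 'block user access', 'isolate endpoint']
```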

By automating these initial responses, AI drastically shrinks the window of opportunity for attackers. This speed is absolutely critical in healthcare, where the average cost of a data breach is roughly $9.77 million per incident.

Building these intelligent systems requires specialised expertise. This is why many organisations partner with firms offering custom healthcare software solutions to integrate AI-driven security directly into their existing infrastructure. This ensures the technology is properly fitted to the unique workflows and compliance needs of the healthcare environment, transforming security from a reactive chore into a proactive, intelligent shield for patient data.

Practical Use Cases for Securing Patient Data

So, we've talked about how AI can spot threats. Now, let's get into the nitty-gritty of how it’s actually being used on the ground to build a stronger, more intelligent security framework. These aren't just theoretical ideas; these applications are actively protecting sensitive patient information every single day.

Smarter Access Control

One of the most immediate upgrades AI brings is to access control. For years, we've relied on static credentials – a username and a password. The problem? They can be stolen, shared, or cracked. AI offers a far more dynamic and intelligent alternative.

Instead of just checking a password, an AI-driven system analyses a user’s behaviour in real-time. It learns the typical login times, the usual locations, and the types of data each person accesses, building a unique digital fingerprint for them. If someone's actions suddenly veer off-script, like a nurse accessing patient records from a different country at 3 a.m., the system can instantly flag the activity, require extra verification, or even lock the account. It's about stopping a breach before it even gets started.
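The "digital fingerprint" can be as simple as the sets of hours, countries and devices a user normally signs in from. In the sketch below (Python; the profile fields and the allow / extra-verification / lock policy are illustrative assumptions), one deviation triggers extra verification and several trigger a lock.

```python
from dataclasses import dataclass, field


@dataclass
class UserProfile:
    """Learned sign-in habits for one user."""
    usual_hours: set[int] = field(default_factory=set)
    known_countries: set[str] = field(default_factory=set)
    known_devices: set[str] = field(default_factory=set)

    def learn(self, hour: int, country: str, device: str) -> None:
        """Fold a verified, legitimate sign-in into the profile."""
        self.usual_hours.add(hour)
        self.known_countries.add(country)
        self.known_devices.add(device)


def decide(profile: UserProfile, hour: int, country: str, device: str) -> str:
    """Count how far a sign-in strays from the profile and respond accordingly."""
    deviations = sum([
        hour not in profile.usual_hours,
        country not in profile.known_countries,
        device not in profile.known_devices,
    ])
    if deviations == 0:
        return "allow"
    if deviations == 1:
        return "require_extra_verification"
    return "lock_account"


nurse = UserProfile()
for h in range(7, 19):  # hypothetical day-shift history
    nurse.learn(h, "CA", "ward-workstation-12")
print(decide(nurse, 9, "CA", "ward-workstation-12"))  # allow
print(decide(nurse, 3, "FR", "unknown-laptop"))       # lock_account
```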

Advancing Privacy with New AI Techniques

Another game-changing area is privacy-preserving machine learning (PPML). Healthcare organisations are sitting on mountains of incredibly sensitive data, and using it for research or training new AI models creates a huge privacy risk. This is where techniques like federated learning come in, and frankly, they're brilliant.

Here's how it works: instead of pooling all that sensitive patient data into one central server for an AI model to train on, federated learning flips the script and sends the model to the data. The algorithm learns locally on servers within each hospital, and only the resulting model updates (such as learned weights) are sent back to be aggregated, never the raw patient data. This allows powerful, collaborative medical research without patient records ever leaving the institutions that hold them.

Think of it like a team of top chefs collaborating on a secret recipe. Instead of shipping all their rare, priceless ingredients to one central kitchen (a huge risk!), each chef works on a part of the recipe in their own secure kitchen. They then share only their cooking instructions, not the ingredients themselves. The final masterpiece is created without the core ingredients ever leaving their safe homes.
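Federated averaging (FedAvg) is one widely used way to implement this. The toy Python sketch below fits a one-parameter model, y ≈ w·x, across two hypothetical hospitals: each site runs gradient descent on its own records, and the server only ever receives and averages the resulting weights, weighted by how many records each site holds. The data, learning rate and round counts are assumptions for illustration. Shared updates can still leak some information, which is why real deployments often add safeguards such as secure aggregation or differential privacy.

```python
def local_update(w: float, data: list[tuple[float, float]],
                 lr: float = 0.01, epochs: int = 20) -> float:
    """Gradient descent on mean squared error for y ≈ w * x, run on-site.
    The raw (x, y) records never leave this function."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(global_w: float,
                    sites: list[list[tuple[float, float]]]) -> float:
    """One FedAvg round: every site trains locally, then the server averages
    the returned weights, weighted by each site's number of records."""
    total = sum(len(data) for data in sites)
    return sum(local_update(global_w, data) * len(data) for data in sites) / total


# Hypothetical (feature, outcome) pairs held privately by two hospitals
hospital_a = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
hospital_b = [(1.0, 2.1), (4.0, 7.9)]

w = 0.0
for _ in range(10):
    w = federated_round(w, [hospital_a, hospital_b])
print(round(w, 2))  # 1.99
```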

Building these kinds of advanced systems isn't simple; it requires a deep understanding of both clinical workflows and sophisticated software architecture. That’s why many organisations work with partners who specialise in building custom healthcare software solutions that bake these privacy-first features in from the start.

Automating Data Classification and Management

Let's be honest, not all data is created equal. A patient's name is sensitive, but their entire genomic sequence is on another level. Manually classifying all this information is a monumental task, and human error is always a risk.

AI is incredibly good at this. It can automatically scan, understand, and classify data based on its content and context, applying the right security tags and handling policies without anyone lifting a finger. This automation ensures that the most critical information always gets the highest level of encryption and the tightest access controls. It also makes life easier for compliance, since data is consistently managed according to regulations. This kind of precise, automated control is a key part of effective cybersecurity compliance solutions.
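Production classifiers are usually trained models, but a rule-based stand-in shows the shape of the idea. In this Python sketch, the sensitivity tiers, regular expressions (including the nine-digit, SIN-style number pattern) and handling policies are all hypothetical: the classifier tags text with the most sensitive tier it matches and looks up the handling rules for that tier.

```python
import re

# Hypothetical tiers, checked from most to least sensitive.
RULES = [
    ("restricted", [re.compile(r"\b(genom|genetic|HIV)\w*", re.IGNORECASE)]),
    ("confidential", [
        re.compile(r"\b\d{3}-\d{3}-\d{3}\b"),  # SIN-style identifier
        re.compile(r"\b(diagnos|prescri)\w*", re.IGNORECASE),
    ]),
]

# Hypothetical handling policy attached to each tag
POLICIES = {
    "restricted": {"encrypt": True, "access": "named care team only"},
    "confidential": {"encrypt": True, "access": "clinical staff"},
    "internal": {"encrypt": False, "access": "all staff"},
}


def classify(text: str) -> tuple[str, dict]:
    """Return the most sensitive matching tag and its handling policy."""
    for tag, patterns in RULES:
        if any(p.search(text) for p in patterns):
            return tag, POLICIES[tag]
    return "internal", POLICIES["internal"]


print(classify("Whole genome sequencing results attached")[0])  # restricted
print(classify("Diagnosis: type 2 diabetes")[0])                # confidential
print(classify("Cafeteria menu for Friday")[0])                 # internal
```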

To really grasp the shift, it helps to see the old way and the new way side-by-side.

Traditional Security vs AI-Powered Security in Healthcare

The move from conventional data security to AI-driven approaches marks a fundamental improvement in how we protect patient information. The following table highlights just how significant these changes are in terms of efficiency, accuracy, and proactivity.

| Security Function | Traditional Approach | AI-Powered Approach |
|---|---|---|
| Access Control | Relies on static credentials (passwords, key cards). | Uses dynamic, behaviour-based authentication that adapts to user actions in real-time. |
| Data Handling | Requires manual classification and policy enforcement, which is prone to human error. | Automates data classification and applies security policies based on content sensitivity. |
| Model Training | Centralises sensitive data, creating a high-value target for attackers. | Employs federated learning to train models on decentralised data, keeping PHI secure. |

This evolution is a massive leap forward. By putting AI in charge of these critical functions, we not only get stronger security but also free up our IT teams to focus on bigger-picture initiatives. Integrating this intelligence is now a core part of modern software development services, ensuring security is designed in, not just bolted on as an afterthought. As we explored in our custom software solutions guide, a strategic approach is fundamental to getting this right.

Ultimately, these real-world examples show that AI isn't just a defensive shield; it's a proactive guardian that intelligently manages access, preserves privacy, and organises data to create a genuinely secure healthcare environment. Of course, building this environment means navigating a complex web of regulations, which is exactly what we'll dive into next.

Automating Compliance in a Complex Regulatory Landscape

Anyone working in Canadian healthcare knows just how tangled the web of privacy regulations can be. Between federal laws like PIPEDA, various provincial privacy acts, and sometimes even the cross-border demands of HIPAA, just staying compliant can feel like a full-time job. The traditional way of handling this, manual checklists and periodic audits, is not only slow and expensive but also dangerously prone to human error.

This is where AI completely changes the game. Instead of treating compliance as a once-a-year event, AI turns it into an automated, continuous process that hums along in the background, 24/7. Think of it as a tireless digital compliance officer, ensuring data handling policies aren’t just sitting in a binder but are actively enforced across your entire organisation.

AI-powered systems can watch every single interaction with patient data in real time. This means they can instantly flag and even block actions that violate your rules, like an unauthorised attempt to download a huge batch of patient records or move sensitive data to an unsecured device. This kind of proactive enforcement dramatically cuts the risk of a costly compliance breach.
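In code, this kind of enforcement boils down to checking every attempted action against explicit rules before it is allowed. The Python sketch below encodes the two examples above; the `DataAction` fields, the user name and the 50-record bulk-download limit are hypothetical policy choices, and a real deployment would combine such rules with behavioural scoring.

```python
from dataclasses import dataclass

MAX_BULK_DOWNLOAD = 50  # hypothetical per-request limit on patient records


@dataclass
class DataAction:
    user: str
    kind: str              # e.g. "view" or "download"
    record_count: int
    device_managed: bool   # is the destination an enrolled, encrypted device?


def check_action(action: DataAction) -> tuple[bool, str]:
    """Return (allowed, reason) for an attempted action on patient data."""
    if action.kind == "download" and not action.device_managed:
        return False, "patient data may not be copied to an unmanaged device"
    if action.kind == "download" and action.record_count > MAX_BULK_DOWNLOAD:
        return False, (f"bulk download of {action.record_count} records "
                       f"exceeds the limit of {MAX_BULK_DOWNLOAD}")
    return True, "allowed"


print(check_action(DataAction("dr_lee", "download", 1200, device_managed=True)))
# (False, 'bulk download of 1200 records exceeds the limit of 50')
```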

Generating Audit Trails and Ensuring Data Sovereignty

When the regulators show up, you need a clear, comprehensive audit trail, and you need it now. Piecing this evidence together manually can take weeks and pull staff away from their real jobs. AI handles this automatically. It can generate detailed, tamper-evident logs of who accessed what data, when they did it, and from where, giving you a transparent and defensible compliance record on demand.
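One common way to make such logs tamper-evident is to chain them: each entry stores a cryptographic hash of the previous entry, so editing any past entry breaks the chain from that point on. This Python sketch shows the idea with the standard library only; the field names and sample entries are hypothetical, and production systems typically also write the chain to append-only storage so it cannot simply be rebuilt.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    """Hash an entry's fields in a stable (sorted-key) JSON encoding."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, user: str, record_id: str, action: str,
               location: str, timestamp: str) -> None:
        """Append who did what, to which record, when, and from where."""
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"user": user, "record": record_id, "action": action,
                "location": location, "time": timestamp, "prev": prev}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry fails."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.record("nurse_kim", "MRN-1001", "view", "Toronto", "2025-03-01T09:14:00")
log.record("dr_lee", "MRN-1001", "update", "Toronto", "2025-03-01T10:02:00")
print(log.verify())  # True
log.entries[0]["user"] = "someone_else"
print(log.verify())  # False
```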

That level of detail is crucial, but for any Canadian organisation, there's another, even more critical piece of the puzzle: data sovereignty. To comply with our privacy laws, patient data often has to be stored and processed right here in Canada, shielding it from foreign laws and access requests.

AI is a huge help in enforcing this.

- Monitoring Data Flow: AI tools can track how data moves across your networks, making sure protected health information (PHI) never leaves Canadian servers without proper authorisation.
- Automating Geolocation Policies: It can automatically apply rules that restrict data access based on geography, which makes managing data residency requirements a whole lot easier.
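Both checks can be expressed as simple policy functions that sit in front of every transfer or access request. In this Python sketch the region labels, the `phi` data class and the exception list are hypothetical placeholders for however your environment names its storage locations and approvals.

```python
CANADIAN_REGIONS = {"canada-central", "canada-east"}  # hypothetical region labels


def transfer_allowed(data_class: str, destination_region: str,
                     approved_exceptions: frozenset[str] = frozenset()) -> bool:
    """PHI may only move to Canadian regions unless an exception is on file."""
    if data_class != "phi":
        return True
    return (destination_region in CANADIAN_REGIONS
            or destination_region in approved_exceptions)


def access_allowed(data_class: str, request_country: str) -> bool:
    """Geolocation rule: PHI can only be opened from within Canada."""
    return data_class != "phi" or request_country == "CA"


print(transfer_allowed("phi", "canada-east"))  # True
print(transfer_allowed("phi", "us-east"))      # False
```

In practice `request_country` would usually come from IP geolocation, which a VPN can mask, so it works best as one signal among several rather than the only gate.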

This level of automation is a key part of modern cybersecurity compliance solutions, which are built specifically to handle the unique regulatory pressures of the Canadian healthcare sector.

From Manual Burden to Strategic Advantage

Automating these compliance tasks does more than just lower your risk profile; it frees up your people. Your IT and compliance teams can stop spending their days buried in audit logs and start focusing on bigger things, like improving patient care systems or strengthening your overall security.

By taking over the repetitive, rule-based work of compliance, AI lets healthcare organisations shift from a reactive, "check-the-box" mindset to a proactive, risk-based security strategy. It ensures that compliance is a continuous state, not just a periodic goal.

This is a massive operational win. Of course, to protect patient data effectively, it’s also important to look at frameworks beyond just national laws. For a deeper look into another key framework, you can learn more by understanding SOC 2 compliance in the era of AI-driven threats.

Bringing AI into your compliance framework isn't just about avoiding fines; it's about building and maintaining trust with your patients. As we've explored further in our guide, AI in healthcare data privacy in Canada has become a critical topic for providers who want to keep that trust intact. In an age where a single breach can have devastating consequences, proving you're a responsible steward of sensitive information is everything.

Getting this right requires a smart implementation plan. It's not about just plugging in a single tool but about weaving intelligent automation into the very fabric of your organisation’s data governance. Pulling off this kind of sophisticated integration often requires a mix of deep healthcare knowledge and great technical skill, which is why many organisations partner with experts in software development services to build a secure and compliant digital foundation from the ground up.

A Practical Roadmap for Implementing AI Security

Bringing AI into your existing security setup can feel like a monumental task. The good news? It doesn't have to be. For healthcare organisations, the secret is to move deliberately through a few key phases instead of trying to boil the ocean with a massive, one-time overhaul. This roadmap breaks it all down, guiding you from a baseline assessment to a fully scaled-up and successful implementation.

The first, and arguably most important, step is to conduct a comprehensive security assessment. Before you even think about new technology, you need a brutally honest picture of your current security posture. This means digging in to identify existing vulnerabilities, mapping out exactly how sensitive data moves through your systems, and zeroing in on the areas carrying the most risk. Think of this assessment as the foundation; it’s the benchmark you'll use to measure just how effective your AI security tools really are.

Once you have that clear-eyed view of your security landscape, you can move on to establishing strong data governance protocols. This is the critical groundwork that ensures your AI models have high-quality, properly managed data to learn from. Without it, even the most sophisticated algorithms are bound to fail.

Building a Strong Foundation

Getting data governance right isn’t just an IT checklist item; it’s a strategic effort that needs buy-in from across the entire organisation. It's all about creating clear, unambiguous rules for how data is handled, classified, and accessed.

Key governance activities usually include:

- Defining Data Policies: You need to clearly establish who can access what data, for what reason, and under which specific conditions.
- Classifying Sensitive Data: The system should automatically tag and categorise information based on its sensitivity level, making sure your most high-risk data gets the Fort Knox treatment it deserves.
- Ensuring Data Integrity: This is about putting processes in place to maintain the accuracy and consistency of your data. It’s the only way to avoid the classic "garbage in, garbage out" problem that can completely derail an AI model.
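Data policies that spell out who, what, why and when translate naturally into policy-as-data that software can enforce. In this Python sketch the roles, data classes, purposes and the on-shift condition are hypothetical examples; anything not explicitly permitted is denied.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    role: str                 # who
    data_class: str           # what
    purposes: frozenset[str]  # for what reason
    on_shift_only: bool       # under which condition


POLICIES = [
    Policy("nurse", "confidential", frozenset({"treatment"}), on_shift_only=True),
    Policy("physician", "restricted", frozenset({"treatment", "consult"}), on_shift_only=False),
    Policy("researcher", "deidentified", frozenset({"research"}), on_shift_only=False),
]


def is_permitted(role: str, data_class: str, purpose: str, on_shift: bool) -> bool:
    """Grant access only if some policy explicitly covers this request."""
    for policy in POLICIES:
        if (policy.role == role and policy.data_class == data_class
                and purpose in policy.purposes):
            return on_shift or not policy.on_shift_only
    return False  # default deny


print(is_permitted("nurse", "confidential", "treatment", on_shift=True))   # True
print(is_permitted("nurse", "confidential", "treatment", on_shift=False))  # False
```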

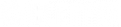

The flowchart above shows how an automated process can continuously monitor, audit, and enforce these kinds of governance policies.

What this really shows is how AI can shift compliance from a static, once-a-year audit to a dynamic, real-time function that’s always on, actively protecting your data.

Selecting Partners and Planning the Rollout

With a solid governance framework in place, you’re ready to start looking at AI vendors and technology partners. It’s absolutely vital to choose partners who have deep, proven experience in the Canadian healthcare sector; they need to understand the unique compliance and privacy challenges we face here. Building effective custom healthcare software solutions is a specialised skill; it demands more than just technical chops, requiring a real understanding of clinical workflows and our regulatory environment.

Instead of a risky "big bang" launch, always opt for a phased rollout. Start small with a focused pilot project in a controlled environment. A pilot is your chance to demonstrate the value of AI security quickly, test how well it works in your world, and gather critical feedback without putting your entire operation at risk.

A successful pilot project is your single best tool for getting wider stakeholder buy-in. When you can walk into a boardroom and show tangible results, like a 40% reduction in false-positive security alerts or the detection of a threat that was previously invisible, it becomes a whole lot easier to justify a larger investment.
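It helps to agree up front on how a figure like that will be calculated, and to check that fewer false alarms did not come at the cost of missed incidents. This Python sketch uses invented monthly counts purely to show the arithmetic; the class and field names are assumptions.

```python
from dataclasses import dataclass


@dataclass
class AlertStats:
    true_positives: int   # alerts confirmed as real incidents
    false_positives: int  # alerts triaged as benign

    @property
    def precision(self) -> float:
        """Share of alerts that were worth an analyst's time."""
        total = self.true_positives + self.false_positives
        return self.true_positives / total if total else 0.0


def false_positive_reduction(baseline: AlertStats, pilot: AlertStats) -> float:
    """Percentage drop in false positives between two equal-length periods."""
    if baseline.false_positives == 0:
        raise ValueError("baseline period has no false positives to reduce")
    drop = baseline.false_positives - pilot.false_positives
    return 100.0 * drop / baseline.false_positives


# Invented counts for one month before and one month during the pilot
before = AlertStats(true_positives=30, false_positives=1200)
during = AlertStats(true_positives=32, false_positives=720)
print(false_positive_reduction(before, during))  # 40.0
print(during.precision > before.precision)       # True
```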

Finally, don't forget the human side of the equation. Any new system is only as good as the team running it. You have to invest in comprehensive training to make sure your IT and security staff understand how to manage the system, interpret its insights, and respond to the alerts it generates. As we’ve explored in guides on software development services, a well-planned strategy is the key to success. This methodical approach ensures your journey into AI in healthcare data security isn’t just a one-off project, but a sustainable part of your long-term security posture.

Looking Ahead: The Next Chapter in Healthcare Cybersecurity

The world of AI in healthcare data security isn't static. It’s a fast-moving field where both attackers and defenders are constantly levelling up. As we look to the future, a new set of challenges and opportunities is already taking shape, pushing healthcare organisations to build security that’s not just strong, but also nimble and ready for what’s next.

You could almost call it a cybersecurity arms race. On one side, attackers are honing their own AI-powered tools, like malware that can rewrite its own code on the fly to avoid getting caught. We're also seeing more sophisticated social engineering tactics, including deepfakes that can mimic a senior doctor’s voice or video to trick staff into handing over sensitive credentials.

The Shift Towards Explainable AI in Security

To fight back against these smarter threats, the industry is making a crucial pivot toward Explainable AI (XAI). One of the biggest frustrations with earlier AI security models was their "black box" problem; they could flag an issue, but couldn’t tell you why. This made it incredibly difficult for human analysts to trust the alerts or figure out what really happened after an incident.

XAI flips that script completely. It’s built from the ground up to give clear, human-readable reasons for its actions. So, instead of a vague alert like "suspicious login detected," an XAI system might report: "This login was flagged because it came from a new country, happened at 3 a.m. local time, and tried to access a patient file type the user has never opened before."

This kind of transparency is a massive leap forward for security teams. It builds trust in the technology, lets analysts respond faster and more accurately, and provides the detailed audit trail needed for compliance.

Quantum Computing and the Threats on the Horizon

Looking even further out, the rise of quantum computing poses a serious threat to our current security standards. A sufficiently powerful quantum computer could break the public-key encryption (such as RSA and elliptic-curve cryptography) that protects much of what we do digitally, including access to electronic health records. While that capability is likely still years away, the time to start preparing is now.

To build a security posture that can stand the test of time, healthcare organisations should be focusing on a few key areas:

- Continuous Learning: Security tools need to constantly ingest new data and adapt to new threats. This is where AI truly shines.
- Proactive Threat Hunting: Don’t just wait for alarms. Use AI to actively hunt for hidden threats and subtle anomalies inside your network.
- Building a Culture of Security: Technology alone isn’t a silver bullet. Every single person, from the front desk to the C-suite, has a role to play in protecting patient data.
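Continuous learning can be as modest as a baseline that updates itself with every new observation. This Python sketch keeps an exponentially weighted mean and variance (the smoothing factor `alpha`, the starting values and the sample workload are assumptions), so a gradual, legitimate change such as a department's growing workload is absorbed over time while a sudden spike still stands out.

```python
import math


class AdaptiveBaseline:
    """Exponentially weighted mean and variance that keep adapting as data arrives."""

    def __init__(self, alpha: float = 0.1, mean: float = 0.0, var: float = 1.0) -> None:
        self.alpha = alpha  # weight given to the newest observation
        self.mean = mean
        self.var = var

    def score(self, x: float) -> float:
        """How many standard deviations x sits from the current baseline."""
        return (x - self.mean) / math.sqrt(max(self.var, 1e-9))

    def update(self, x: float) -> None:
        """Fold one new observation into the running mean and variance."""
        diff = x - self.mean
        self.mean += self.alpha * diff
        self.var = (1 - self.alpha) * (self.var + self.alpha * diff * diff)


baseline = AdaptiveBaseline(alpha=0.1, mean=40.0, var=4.0)
print(baseline.score(60))  # 10.0: far outside the old normal
for _ in range(100):       # workload settles around 60 (58, 62, 58, ...)
    baseline.update(58)
    baseline.update(62)
print(abs(baseline.score(60)) < 1, baseline.score(200) > 10)  # True True
```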

Creating this kind of forward-thinking defence takes real technical know-how. At Cleffex, staying ahead of these curves is what we do. Our team is focused on pioneering secure and innovative custom healthcare software solutions and offering top-tier software development services. We know that genuine security is a blend of smart technology and strategic planning. As we help shape the future of cybersecurity, our commitment to expertise and trust is at the core of our work.

Frequently Asked Questions

As you start exploring AI for healthcare data security, it's natural to have questions. Here are a few of the most common ones we hear from organisations trying to figure out where to start.

How Does AI Specifically Help with HIPAA Compliance?

Think of AI as a tireless digital watchguard for your patient data. It strengthens HIPAA compliance by constantly monitoring who is accessing Protected Health Information (PHI) and how. If someone's behaviour seems unusual, like a nurse suddenly downloading hundreds of patient files at 3 a.m., the AI can flag it instantly. This goes a long way in preventing a potential breach before it happens.

AI also automates the tedious parts of compliance. It can generate the detailed audit logs you need to have on hand and even help classify data on the fly, making sure sensitive PHI gets the right level of protection. This automation cuts down on human error, which is a major source of compliance slips, and helps you build a more robust defence as part of your overall cybersecurity compliance solutions.

Can Small Clinics Afford to Implement AI for Data Security?

Absolutely. The old idea that AI requires a massive, in-house server farm and a team of data scientists just isn't true anymore. With AI-as-a-Service (AIaaS) and cloud-based security tools, even smaller practices can access powerful capabilities without a huge upfront investment.

You don't have to boil the ocean. A small clinic could start with a specific, high-value solution, like an AI-powered tool for threat detection on their network. Working with a partner to develop targeted custom healthcare software solutions can also make it much more affordable. This approach lets you scale your security measures as your practice grows, matching your investment to your needs.

What Is the Biggest Risk of Using AI in Healthcare Security?

One of the most significant risks is something called an "adversarial attack." This is a sophisticated trick where an attacker deliberately feeds the AI misleading information to fool it. For example, they might disguise a piece of malware to look like a harmless file, causing the AI to let it through your defences.
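To see why this works, consider a toy linear detector. In the Python sketch below the feature names, weights and threshold are invented for illustration: the attacker keeps the malicious behaviour intact but pads the file with harmless-looking strings, which the model associates with benign software, until the score slips under the threshold. This is why features an attacker can add cheaply deserve scrutiny, and why models should be tested against deliberately manipulated inputs.

```python
# Hypothetical learned weights: positive values push towards "malicious"
WEIGHTS = {
    "calls_encryption_api": 2.0,
    "mass_file_writes": 1.5,
    "benign_string_count": -0.25,
}
THRESHOLD = 2.0


def malicious_score(features: dict[str, float]) -> float:
    """Weighted sum of features; unknown features contribute nothing."""
    return sum(WEIGHTS.get(name, 0.0) * value for name, value in features.items())


def is_flagged(features: dict[str, float]) -> bool:
    return malicious_score(features) >= THRESHOLD


ransomware = {"calls_encryption_api": 1, "mass_file_writes": 1}
disguised = {**ransomware, "benign_string_count": 8}  # same payload, padded
print(is_flagged(ransomware), is_flagged(disguised))  # True False
```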

Another major concern is data bias. If an AI model is trained on data that isn't truly representative of your patient population, it can lead to skewed and ineffective security rules. These risks aren't deal-breakers, but they highlight the need for careful model testing, validation, and ongoing monitoring to keep the system honest.

How Do We Choose the Right AI Security Vendor?

When you're vetting potential partners, look for deep healthcare experience first. They need to understand the nuances of compliance and the incredible sensitivity of the data you're protecting. Don't just settle for a generic tech vendor.

Transparency is also key. The vendor should be able to explain how their AI works and why it makes the decisions it does – no "black box" solutions. Make sure their platform can integrate smoothly with the systems you already have in place. Ask for real-world case studies, scrutinise their data privacy policies, and check what kind of support and training they offer. Choosing the right partner is often the most critical step in any project delivered via software development services. At Cleffex, this is where our industry focus really comes into play, something we've built our reputation on. You can read more about our journey on our about us page.